docs4IT

IT organizations are continuously challenged to deliver better IT services at lower cost in a turbulent environment. Several management frameworks have been developed to cope with this challenge, one of the best known being the IT Infrastructure Library (ITIL).

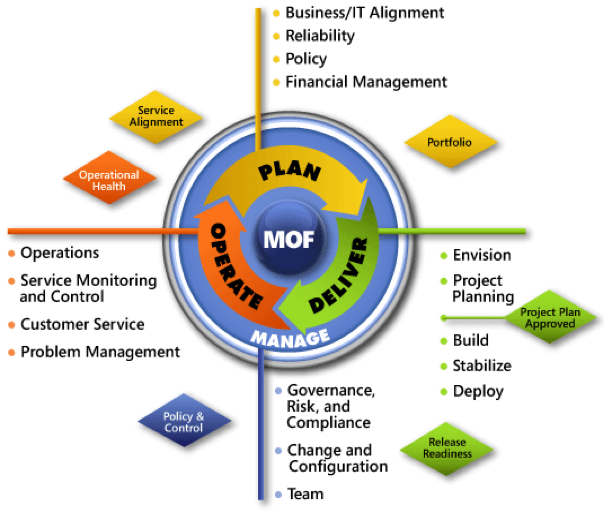

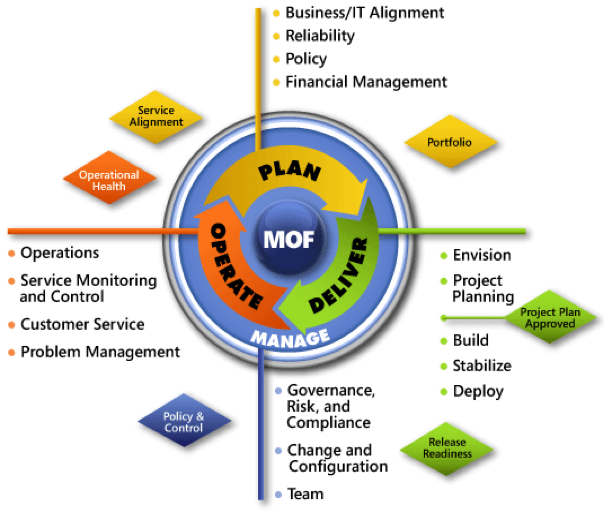

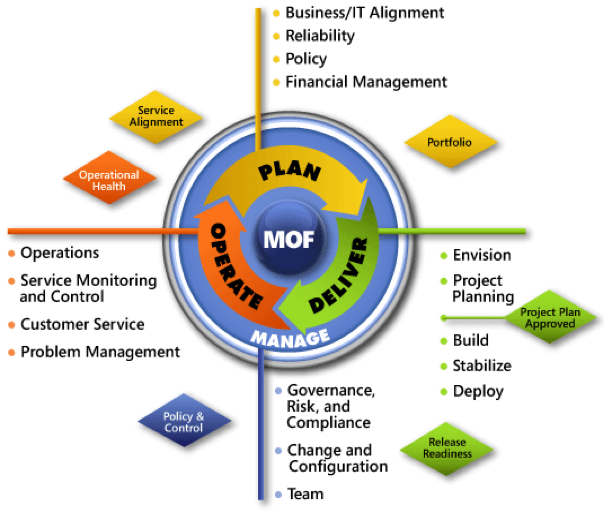

Microsoft® Operations Framework (MOF) is Microsoft’s structured approach to the same goal as ITTL.

The analysis follows a number of management paradigms that have proven to be essential to IT Service Management:

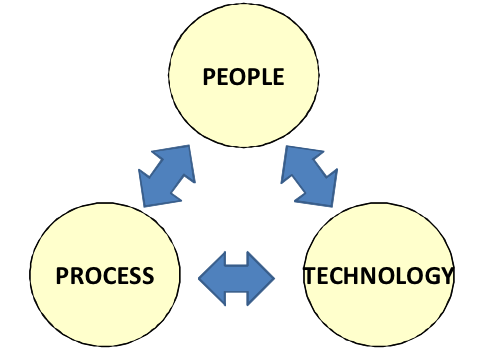

- Process, People, and Technology (PPT)

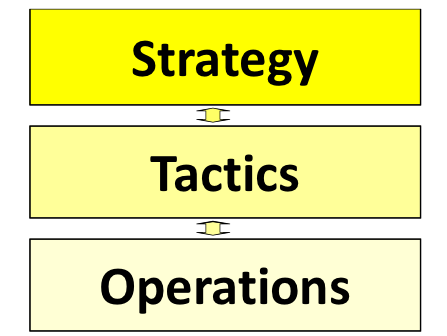

- Strategy, Tactics and Operations (STO)

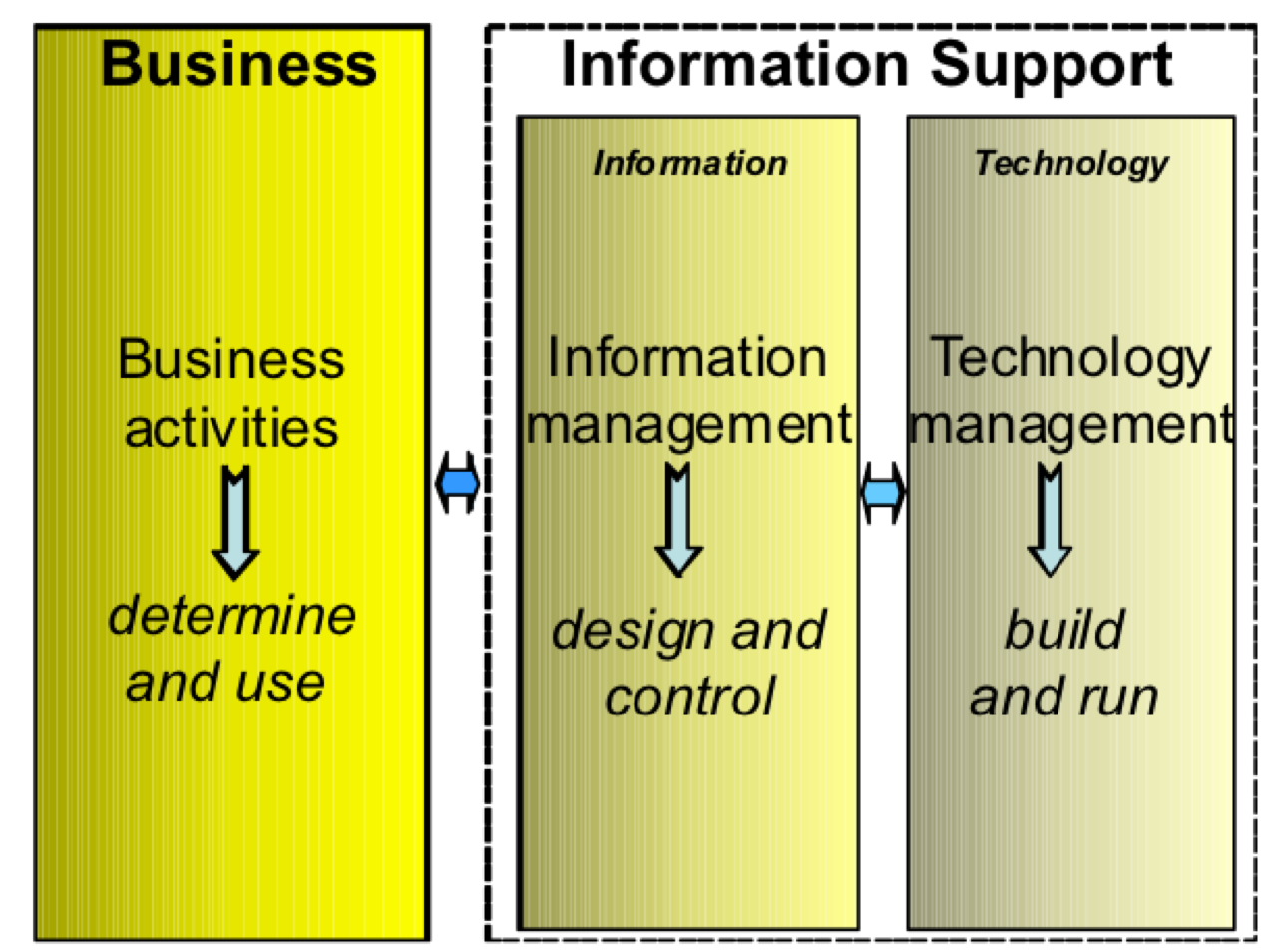

- Separation of Duties (SoD)

- The Strategic Alignment Model Enhanced (SAME)

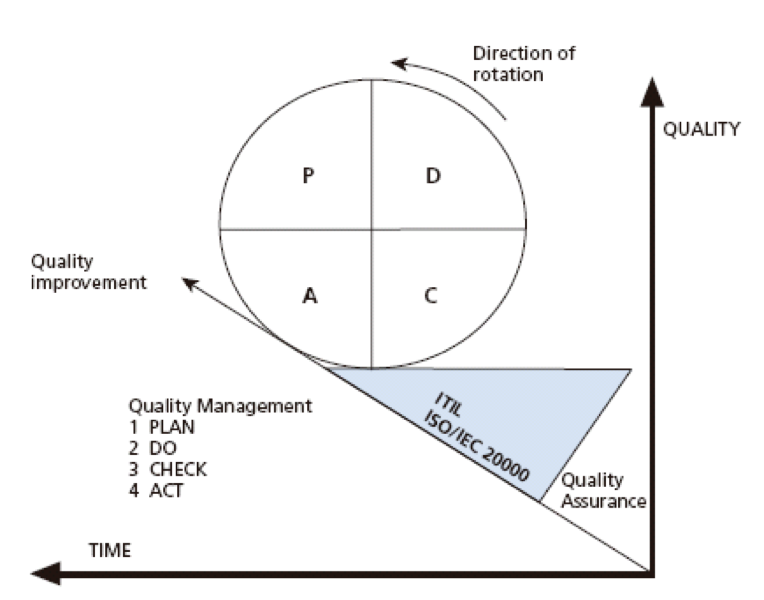

- Deming’s Plan-Do-Check-Act Management Cycle

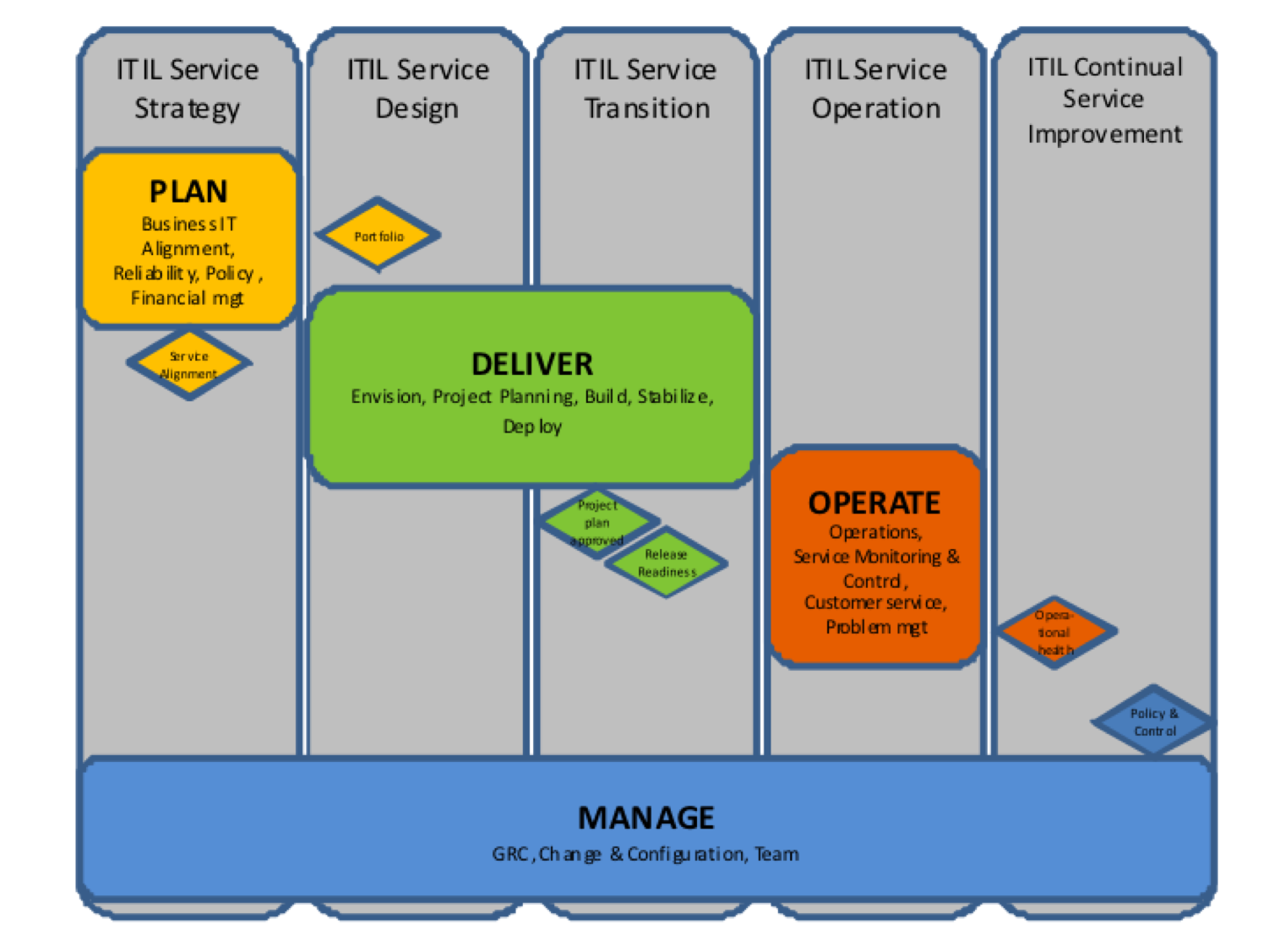

At the highest level, both frameworks follow a lifecycle approach, but these lifecycles are somewhat different. ITIL uses five elements for its lifecycle: Strategy, Design, Transition, Operation, and Continual Improvement, which brings it close to the PDCA model. MOF’s lifecycle core consists of only three phases: Plan, Deliver, and Operate, with one underlying layer (Manage) covering the components that apply to all lifecycle phases.

Both ITIL and MOF use processes and functions as building blocks, although the emphasis differs significantly. ITIL labels most of its components as processes and activities (ITIL has 26 Processes and four functions), while MOF is almost entirely based on Service Management Functions (SMFs), each SMF having a set of key processes, and each process having a set of key activities. This rigid structure supports consistency throughout the framework.

In both frameworks, control of the lifecycle progress runs through a number of transition milestones. These milestones have been made very explicit in MOF’s Management Reviews (MRs). Both frameworks apply the PDCA improvement approach throughout the lifecycle. MOF, like ITIL, offers best-practice guidance that can be followed in full but also in part, for addressing a subset of local problems. The “ITSM language” is quite consistent between both frameworks, with only minor differences. But there also are significant differences between the two frameworks.

A remarkable difference is the way customer calls are handled: ITIL separates incident calls from operational service requests and change requests, and MOF combines several customer request types in a single Customer Service SMF. ITIL and MOF also use very different role sets and role titles. This is largely due to the difference in starting points: ITIL works from the best practices documented in each phase, where MOF starts from a structured organization perspective. An area of significant difference can be found in the approach the two frameworks take to technology. A key element of ITIL is that it is both vendor- and solution-agnostic—meaning, the practices encouraged by ITIL can be applied across the board regardless of the underlying technology. The result is that ITIL focuses on the management structure that makes IT successful, rather than on the technology.

Distinctly different, Microsoft has created MOF to provide a common management framework for its platform products, although MOF can easily be used for other platforms.

Another difference is that ITIL is available in five core books that are sold through various channels, while MOF is available on the internet for free, offering practical guidance in various formats. As a consequence, ITIL copyright is highly protected, where Microsoft made MOF content available under the Creative Commons Attribution License, which makes it freely available for commercial reuse.

Finally, ITIL offers a complex certification scheme for professionals, where Microsoft currently limits its certification for MOF to just one MOF Foundation examination. At the time of this writing, plans for further certifications are under consideration, but no final decisions have been made.

The ITIL certification scheme is much more extensive, and, in effect, offers a qualification structure that can offer a potential career path for IT professionals.

Both frameworks show plenty of similarities and can be used interchangeably in practice. Both also have some specific features that may be of good use in a specific case. Main focus of ITIL is on the “what,” where MOF concentrates on the “what” as well as the “how.”

In theory there is no difference between theory and practice. In practice there is.

What is ITIL?

ITIL offers a broad approach to the delivery of quality IT services. ITIL was initially developed in the 1980s and 1990s by CCTA (Central Computer and Telecommunications Agency, now the Office of Government Commerce, OGC), under contract to the UK Government. Since then, ITIL has provided not only a best practice based framework, but also an approach and philosophy shared by the people who work with it in practice.

ITTL - The Service Lifecycle

ITIL Version 3 (2007) approaches service management from the lifecycle of a service. The Service Lifecycle is an organization model providing insight into the way service management is structured, the way the various lifecycle components are linked to each other and to the entire lifecycle system. The Service Lifecycle consists of five components. Each volume of the ITIL V3 core books describes one of these components:

- Service Strategy

- Service Design

- Service Transition

- Service Operation

- Continual Service Improvement

Service Strategy is the axis of the Service Lifecycle that defines all other phases; it is the phase of policymaking and objectives. The phases Service Design, Service Transition, and Service Operation implement this strategy, their continual theme is adjustment and change. The Continual Service Improvement phase stands for learning and improving, and embraces all cycle phases. This phase initiates improvement programs and projects, and prioritizes them based on the strategic objectives of the organization. Each phase is run by a system of processes, activities and functions that describe how things should be done. The subsystems of the five phases are interrelated and most processes have overlap into another phase.

Service Strategy is the axis of the Service Lifecycle that defines all other phases; it is the phase of policymaking and objectives. The phases Service Design, Service Transition, and Service Operation implement this strategy, their continual theme is adjustment and change. The Continual Service Improvement phase stands for learning and improving, and embraces all cycle phases. This phase initiates improvement programs and projects, and prioritizes them based on the strategic objectives of the organization. Each phase is run by a system of processes, activities and functions that describe how things should be done. The subsystems of the five phases are interrelated and most processes have overlap into another phase.

What is MOF?

First released in 1999, Microsoft Operations Framework (MOF) is Microsoft’s structured approach to helping its customers achieve operational excellence across the entire IT service lifecycle. MOF was originally created to give IT professionals the knowledge and processes required to align their work in managing Microsoft platforms cost-effectively and to achieve high reliability and security. The new version, MOF 4.0, was built to respond to the new challenges for IT: demonstrating IT’s business value, responding to regulatory requirements and improving organizational capability. It also integrates best practices from Microsoft Solutions Framework (MSF).

MOF - IT Service Lifecycle

The IT service lifecycle describes the life of an IT service, from planning and optimizing the IT service and aligning it with the business strategy, through the design and delivery of the IT service in conformance with customer requirements, to its ongoing operation and support, delivering it to the user community. Underlying all of this is a foundation of IT governance, risk management, compliance, team organization, and change management. The IT service lifecycle of MOF is composed of three ongoing phases and one foundational layer that operates throughout all of the other phases:

- Plan phase: plan and optimize an IT service strategy in order to support business goals and objectives.

- Deliver phase: ensure that IT services are developed effectively, deployed successfully, and ready for Operations.

- Operate phase: ensure that IT services are operated, maintained, and supported in a way that meets business needs and expectations.

- Manage layer: the foundation of the IT service lifecycle. This layer is concerned with IT governance, risk, compliance, roles and responsibilities, change management, and configuration. Processes in this layer apply to all phases of the lifecycle.

Main Components

Each phase of the IT service lifecycle contains Service Management Functions (SMFs) that define and structure the processes, people, and activities required to align IT services to the requirements of the business. The SMFs are grouped together in phases that mirror the IT service lifecycle. Each SMF is anchored within a lifecycle phase and contains a unique set of goals and outcomes supporting the objectives of that phase. Each SMF has three to six key processes. Each SMF process has one to six key activities. For each phase in the lifecycle, Management Reviews (MRs) serve to bring together information and people to determine the status of IT services and to establish readiness to move forward in the lifecycle. MRs are internal controls that provide management validation checks, ensuring that goals are being achieved in an appropriate fashion, and that business value is considered throughout the IT service lifecycle.

In terms of the approach, both frameworks use a lifecycle structure at the highest level of design. Furthermore, both use processes and functions, although the emphasis differs significantly; ITIL describes many components in terms of processes and activities, with only a few functions, while MOF is almost entirely based on Service Management Functions. This difference is not as severe as it looks at first hand, since ITIL uses the term “process” for many components that actually are functions.

ITIL follows a phased approach in the lifecycle, and most of the components described in one phase also apply, to a greater or lesser extent, to other phases. The control of the MOF lifecycle is much more discrete, using specific milestones that mark the progress through the various stages in the lifecycle. MOF components that apply to more than one of these three lifecycle phases are separated from the lifecycle phases and described in an underlying Management Layer. Both frameworks are best characterized as “practice frameworks” and not “process frameworks.” The main difference is that ITIL focuses more on the “what,” and MOF covers both the “what” and the “how.”

The modeling techniques of ITIL and MOF are not that much different at first sight: both frameworks use extensive text descriptions, supported by flowcharts and schemes. ITIL documents its best practices by presenting processes, activities, and functions per phase of its lifecycle. MOF components have a rigid structure: each SMF has key processes, each process has key activities, and documentation on SMFs and MRs is structured in a very concise format, covering inputs, outputs, key questions, and best practices for each component. This rigid structure supports consistency throughout the framework and supports the user in applying a selection of MOF components for the most urgent local problems. The activation and implementation of ITIL and MOF are not really part of the framework documentation. ITIL has been advocating the “Adopt and Adapt” approach. Supporting structures like organizational roles and skills are described for each phase, but implementation guidance is not documented. MOF, like ITIL, offers best practice guidance that can be followed in full but also in part, for addressing a subset of local problems. Both frameworks speak of “guidance,” leaving the actual decisions on how to apply it to the practitioner.

Support structures for ITIL are not really part of the core documents: although a huge range of products claim compatibility with ITIL, and several unofficial accreditation systems exist in the field, the core books stay far from commercial products and from product certification, due to a desire to remain vendor-neutral. MOF compatibility, on the other hand, is substantially established. Microsoft aligns a broad set of tools from its platform with the MOF framework. And although MOF is not exclusively applicable for these Microsoft management products, the documentation at Microsoft’s TechNet website provides detailed information on the use of specific products from the Microsoft platform.

Differences

Although ITIL and MOF share many values, the two frameworks also show some significant differences.

- Cost: ITIL is available in 5 core books that are sold through various channels, but MOF is available on the internet for free. As a consequence, ITIL copyright is highly protected, where Microsoft made MOF content available under the Creative Commons Attribution License, which makes it freely available for commercial reuse.

- Development: in the latest versions, both ITIL and MOF spent considerable energy on documenting the development of new services and the adjustment of existing services. In addition, ITIL is constantly reviewed via the Change Control Log, where issues and improvements are suggested and then reviewed by a panel of experts who sit on the Change Advisory Board. The ITIL Service Design phase concentrates on service design principles, where the Deliver Phase in MOF concentrates on the actual development of services. The approach taken in MOF is heavily based on project management principles, emphasizing the project nature of this lifecycle phase.

- Reporting: ITIL has a specific entity that describes Reporting, in the Continual Service Improvement phase, where MOF has integrated reporting as a standard activity in SMFs.

- Call handling: ITIL V2 showed a combined handling of incidents and service requests in one process, but in ITIL V3 incident restoration and service request fulfillment were turned into two separately treated practices. MOF on the other hand stays much closer to the ITIL V2 practice, combining several customer requests in one activity flow, for incident restoration requests, information requests, service fulfillment requests, and new service requests. If the request involves a new or non-standard service, a separate change process can be triggered.

- Lifecycle construction: Most elements of ITIL are documented in one and only one of the five core books, but it is then explained they actually cover various phases of the ITIL lifecycle. ITIL uses five elements for its lifecycle: Strategy, Design, Transition, Operation, and Continual Improvement, which brings it close to the PDCA model. MOF’s lifecycle comprises only three phases: Plan, Deliver, Operate, with one underlying layer covering the components that apply to all lifecycle phases. As a consequence, a number of practices are applied all over the MOF lifecycle, but in ITIL these are mostly described in one or a few lifecycle phases. As an example, risk management is part of the Manage layer in MOF, but in ITIL it is mainly restricted to Strategy and Continual Improvement. The same goes for change and configuration management: throughout the MOF lifecycle but in ITIL these are concentrated in the Transition phase.

- Organization: ITIL describes roles and organizational structures in each lifecycle phase. MOF supports best practices for organizational structures by applying the Microsoft Solutions Framework (MSF) approach: throughout the MOF lifecycle responsibilities are documented and accountability is made explicit, and general rules are allocated to the underlying Manage layer.

- Governance: Both frameworks illustrate the difference between governance and management. ITIL describes governance theory and practice in the Strategy phase and in the CSI phase of its lifecycle, and refers to governance requirements in most other phases. MOF explicitly documents accountability and responsibility in all of its lifecycle phases and in the Manage layer, identifying decision makers and stakeholders, and addressing performance evaluation. MOF specifically addresses risk management and compliance in the Manage layer, supporting governance throughout the lifecycle. Explicit Management Reviews are used throughout the MOF framework as control mechanisms.

Positioning

This section will show how ITIL and MOF are positioned in the main paradigms, as discussed before. Appendix A shows the differences in more detail. Lifecycle On a high level, the lifecycles of ITIL and MOF appear to be rather similar, although the phases cannot be compared on a one-to-one basis.

There are some major differences between ITIL and MOF lifecycles:

- ITIL lifecycle phases contain processes, activities, and functions that also apply to other phases. In MOF, the SMFs that apply to more than one phase have been filtered out and grouped in the Manage layer, supporting the entire MOF lifecycle.

- MOF lifecycle phase transitions are managed through several Management Reviews (MRs). These MRs serve to determine the status of IT services and to establish readiness to move forward in the lifecycle. ITIL also uses a number of readiness tests for progress control in the lifecycle phases, but these are less explicit.

People - Process - Technology (PPT)

80 percent of unplanned downtime is caused by people and process issues, including poor change management practices, while the remainder is caused by technology failures and disasters. (Donna Scott, Gartner, Inc., 2003)

Both ITIL and MOF have a strong focus on processes. Both frameworks document the activities that need to be performed to cope with everyday problems and tasks in service organizations. Both frameworks also use the same formal definition of “process,” based on widely accepted ISO standards. However, in both cases the framework documentation is largely presented in a mix of process, people, and some technology, and therefore in the format of procedures, work instructions, and functions. This is for good reasons, because it addresses the actual perception of what people experience in their daily practice. Readers looking for “pure process descriptions” or process “models” will not find these in ITIL nor in MOF. And although ITIL uses the term “process” for many of its components, most of these components are actually functions. MOF uses the term Service Management Function throughout the framework.

Organizational structures are documented quite differently in both frameworks. Individual ITIL roles and MOF roles show some overlap, but both frameworks contain a long list of unique roles. This is largely based on the difference in viewpoint: ITIL works from its practices towards a detailed roles spectrum, and MOF works from a number of basic accountabilities: Support, Operations, Service, Compliance, Architecture, Solutions, and Management. MOF applies the MSF framework as a reference system for these organizational structures, supporting the performance of the organization. In larger organizations the MOF roles can be refined into more detailed structures, but in most organizations the roles are sufficient. The Team SMF of MOF is explicitly focused on the management of IT staff.

Technology is only covered at an abstract level in ITIL: the framework stays far from commercial products and only describes some basic requirements. MOF on the other hand is deeply interwoven with technology solutions. Although MOF has been defined in such a way that it is not technology-specific, the Microsoft technology platform highly aligns with the practices documented in MOF. The MOF website is embedded in the rest of the TechNet documentation on Microsoft products. STO and SoD, in SAME

Strategic levels are covered in both frameworks. ITIL documents its best practices on long-term decisions in the Strategy phase. MOF does the very same in the Plan phase, and supports this in the Manage layer. Tactical levels are covered in a similar way: ITIL concentrates these in the Service Design and CSI phase, and MOF describes its tactical guidance in the Deliver phase, in the Manage layer and in the Operate phase (Problem Management). Operational levels are covered mainly in a single phase in both frameworks; ITIL has its Service Operation phase, and MOF has its Operate phase.

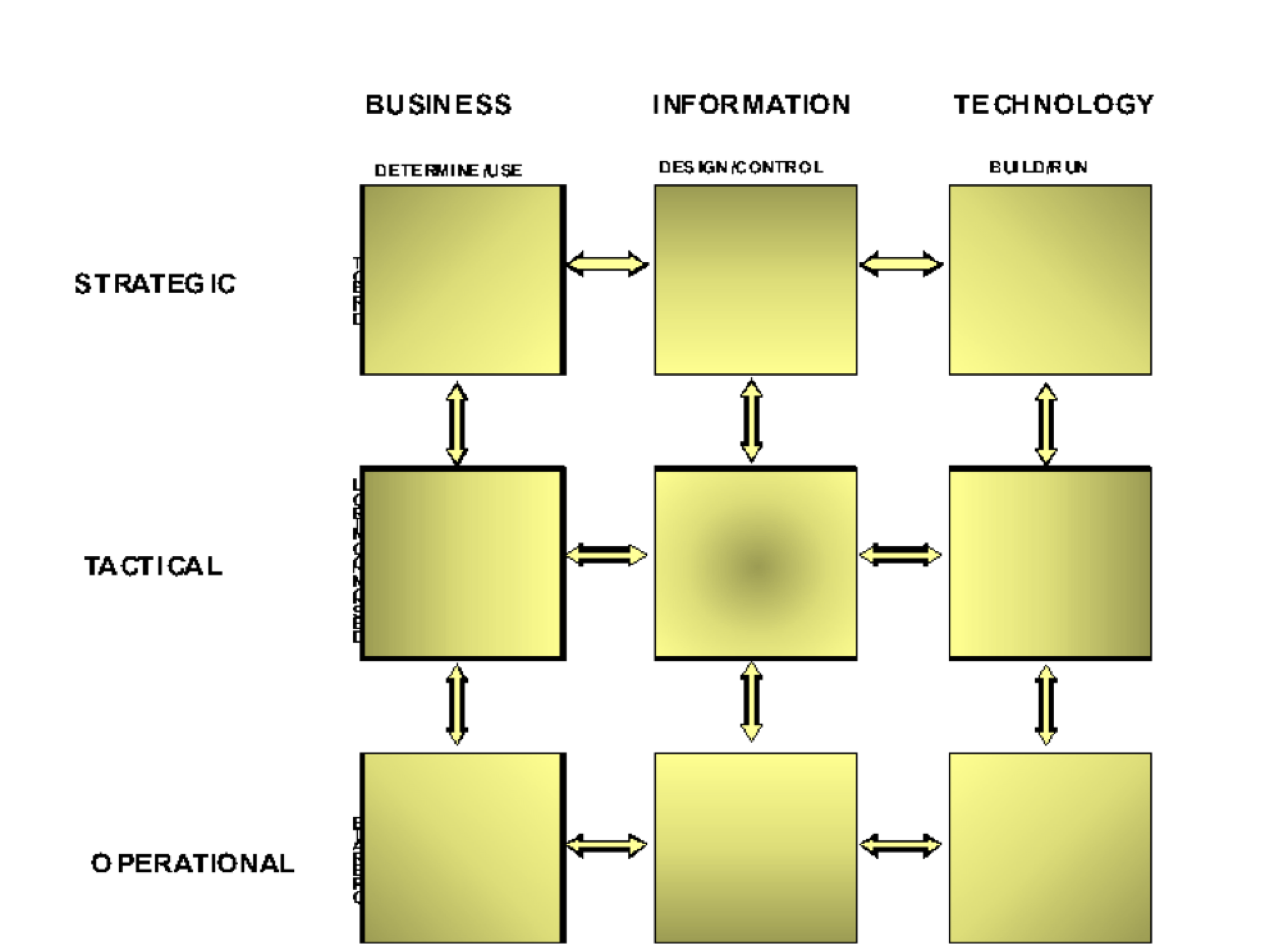

The ITIL lifecycle phases are positioned mainly in the Technology Management domain, emphasizing that ITIL explicitly supports the organizations that deliver IT services. The activities that relate to the specification of the service requirements and the management of enterprise data architectures are typically found in the middle column of the 3x3 SAME matrix.

This also applies to MOF. The MOF Plan phase is largely positioned at the Strategy level, but also concentrates on the Technology Management domain. The Deliver phase is positioned similarly, but then on tactical and operational levels. The Operate phase clearly works at the operational level of the Technology Management domain, except for the very tactical practice of Problem Management. The Manage layer in MOF relates to all three management levels, but also concentrates at the Technology Management domain.

As a consequence, both frameworks require that elements from additional frameworks like TOGAF, ISO27001, CobiT, M_o_R®, BiSL, FSM, and MSP™, are applied for managing the rest of the overarching Information Support domain. Plan-Do-Check-Act (PDCA)

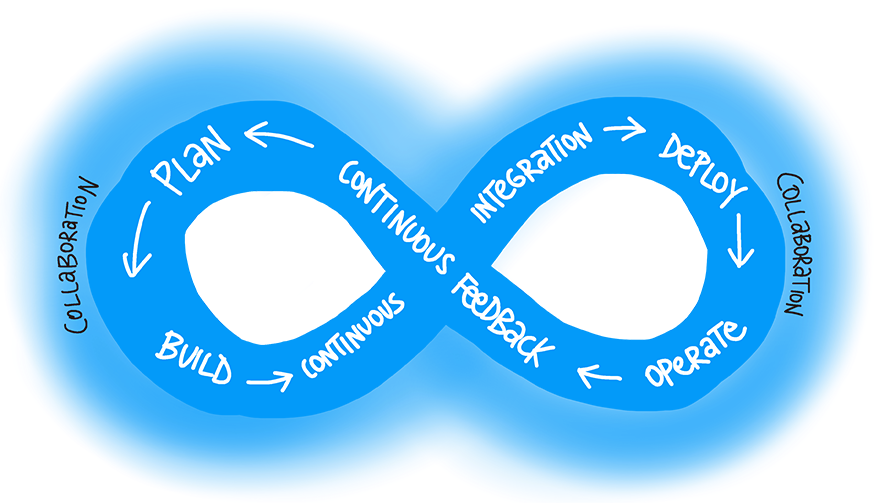

ITIL explicitly follows Demings PDCA management improvement cycle, for implementing the CSI phase, for implementing the Information Security function in the Service Design phase and for the continual improvement of services, processes, and functions throughout the service lifecycle.

MOF does not explicitly list PDCA as a mechanism, but it follows its principles throughout the lifecycle, in all SMFs. Plan-do-check is elementary to the implementation of all SMFs, and various check-act points can be found in the very explicit Management Reviews throughout the MOF framework.

Terminology and Definitions

The “ITSM language” is quite consistent between both frameworks, with only minor differences. For example, where ITIL uses the term Change Schedule, MOF uses Forward Schedule of Change. Such small differences shouldn’t be a problem in practice. Of course both frameworks use some typical terminology that illustrates some of their unique characteristics: • The ITIL core terms utility and warranty, fit for purpose and fit for use, are not used in MOF, and neither are terms like service package – although MOF speaks of “packaged products” in general terms. • Likewise, some explicit MOF terms, like customer service management, stabilize, and issue-tracking, are not used—or are used differently—in ITIL.

The interrelationship of people, process, and technology. A widely accepted paradigm for defining the core focus areas in managing organizational improvement is Process - People - Technology (PPT). When using IT Service Management frameworks for organizational improvement, each of these three areas should be addressed. An important consequence of applying this paradigm is the separation of functions from processes. A process is a structured set of activities designed to accomplish a defined objective in a measurable and repeatable manner, transforming inputs into outputs. Processes result in a goal-oriented change, and utilize feedback for self-enhancing and self-corrective actions. MOF defines a process as interrelated tasks that, taken together, produce a defined, desired result. A function is an organizational capability, a combination of people, processes (activities), and technology, specialized in fulfilling a specific type of work, and responsible for specific end results. Functions use processes. MOF doesn’t offer a definition for function alone; rather, it defines the term service management function (SMF) as a core part of MOF that provides operational guidance for Microsoft technologies employed in computing environments for information technology applications. SMFs help organizations to achieve mission-critical system reliability, availability, supportability, and manageability of IT solutions.

The interrelationship of people, process, and technology. A widely accepted paradigm for defining the core focus areas in managing organizational improvement is Process - People - Technology (PPT). When using IT Service Management frameworks for organizational improvement, each of these three areas should be addressed. An important consequence of applying this paradigm is the separation of functions from processes. A process is a structured set of activities designed to accomplish a defined objective in a measurable and repeatable manner, transforming inputs into outputs. Processes result in a goal-oriented change, and utilize feedback for self-enhancing and self-corrective actions. MOF defines a process as interrelated tasks that, taken together, produce a defined, desired result. A function is an organizational capability, a combination of people, processes (activities), and technology, specialized in fulfilling a specific type of work, and responsible for specific end results. Functions use processes. MOF doesn’t offer a definition for function alone; rather, it defines the term service management function (SMF) as a core part of MOF that provides operational guidance for Microsoft technologies employed in computing environments for information technology applications. SMFs help organizations to achieve mission-critical system reliability, availability, supportability, and manageability of IT solutions.

The interrelationship of strategy, tactics, and operations. A important and widely applied approach to the management of organizations is the paradigm of Strategy - Tactics - Operations. The interrelationship of strategy, tactics, and operations A second important and widely applied approach to the management of organizations is the paradigm of Strategy - Tactics - Operations. At a strategic level an organization manages its long-term objectives in terms of identity, value, relations, choices and preconditions. At the tactical level these objectives are translated into specific goals that are directed and controlled. At the operational level these goals are then translated into action plans and realized.

The interrelationship of strategy, tactics, and operations. A important and widely applied approach to the management of organizations is the paradigm of Strategy - Tactics - Operations. The interrelationship of strategy, tactics, and operations A second important and widely applied approach to the management of organizations is the paradigm of Strategy - Tactics - Operations. At a strategic level an organization manages its long-term objectives in terms of identity, value, relations, choices and preconditions. At the tactical level these objectives are translated into specific goals that are directed and controlled. At the operational level these goals are then translated into action plans and realized.

Information processing systems have one and only one goal; to support the primary business processes of the customer organization. Applying the widely accepted control mechanism of Separation of Duties (SoD), also known as Separation of Control (SoC), we find a domain where information system functionality is specified (Information Management), and another domain where these specifications are realized (Technology Management). The output realized by the Technology Management domain is the operational IT service used by the customer in the Business domain.

Information processing systems have one and only one goal; to support the primary business processes of the customer organization. Applying the widely accepted control mechanism of Separation of Duties (SoD), also known as Separation of Control (SoC), we find a domain where information system functionality is specified (Information Management), and another domain where these specifications are realized (Technology Management). The output realized by the Technology Management domain is the operational IT service used by the customer in the Business domain.

The combination of STO and SoD delivers a very practical blueprint of responsibility domains for the management of organizations; the Strategic Alignment Model Enhanced  This blueprint provides excellent services in comparing the positions of management frameworks, and in supporting discussions on the allocation of responsibilities—for example, in discussions on outsourcing. It is used by a growing number of universities, consultants and practitioners.

This blueprint provides excellent services in comparing the positions of management frameworks, and in supporting discussions on the allocation of responsibilities—for example, in discussions on outsourcing. It is used by a growing number of universities, consultants and practitioners.

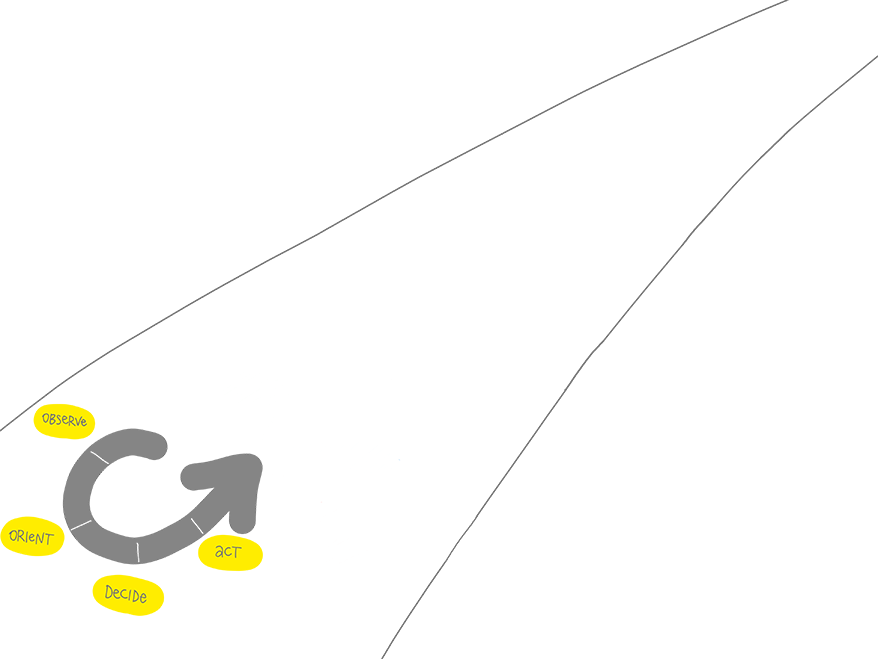

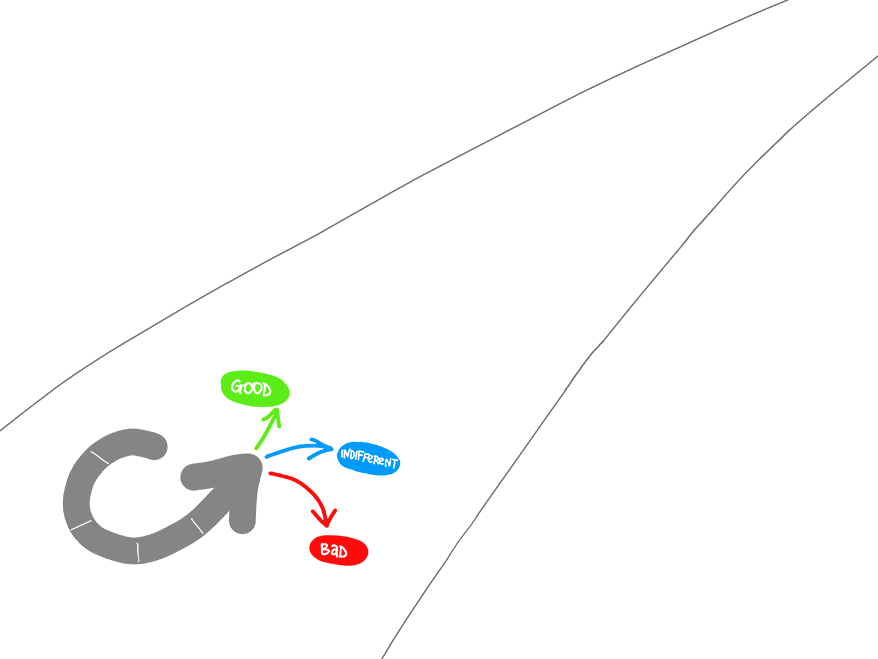

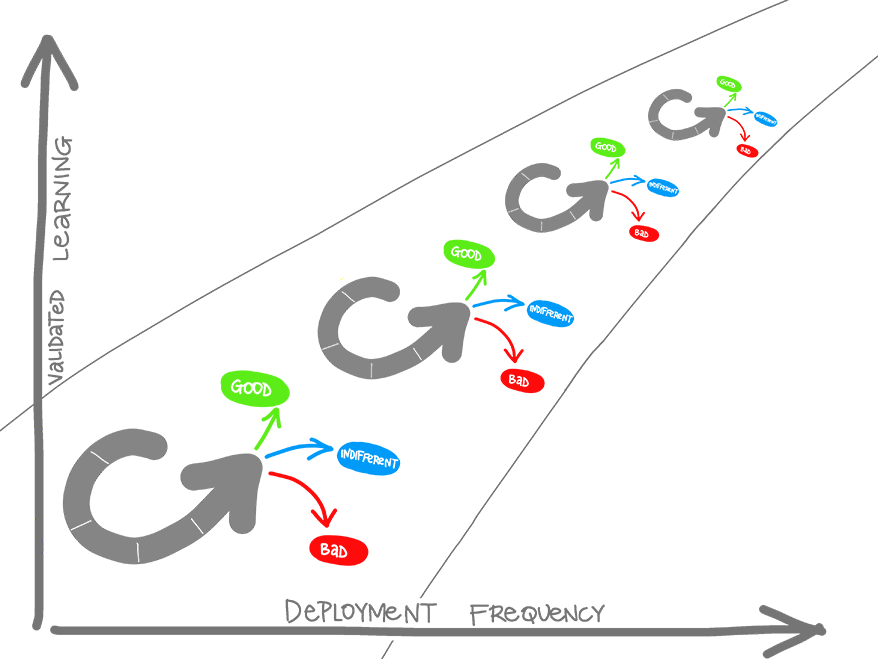

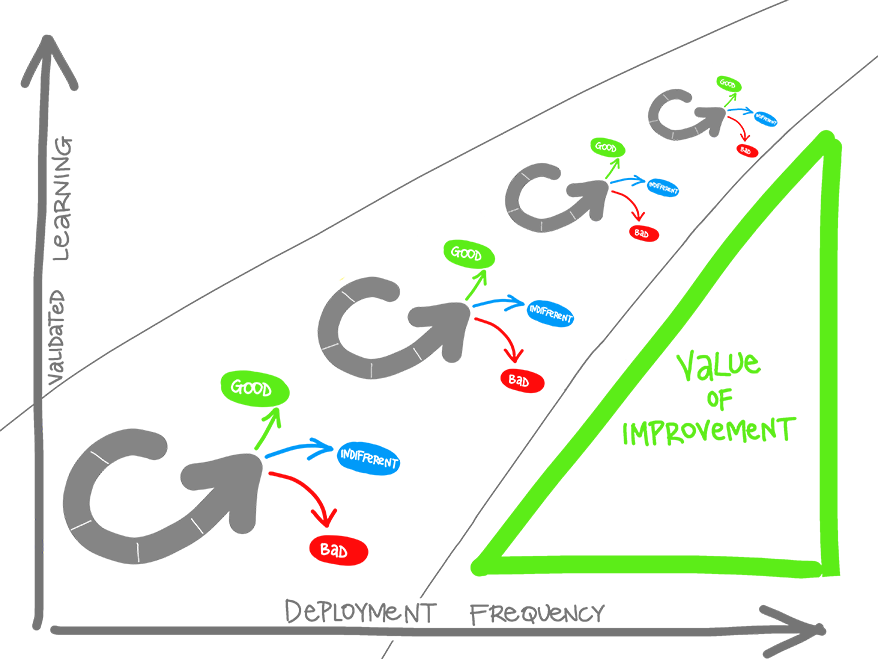

Since IT services are recognized as strategic business assets, organizations need to continually improve the contribution of IT services to business functions, in terms of better results at lower cost. A widely accepted approach to continual improvement is Deming’s Plan-Do-Check-Act Management Cycle.This implies a repeating pattern of improvement efforts with varying levels of intensity. The cycle is often pictured, rolling up a slope of quality improvement, touching it in the order of P-D-C-A, with quality assurance preventing it from rolling back down.

Since IT services are recognized as strategic business assets, organizations need to continually improve the contribution of IT services to business functions, in terms of better results at lower cost. A widely accepted approach to continual improvement is Deming’s Plan-Do-Check-Act Management Cycle.This implies a repeating pattern of improvement efforts with varying levels of intensity. The cycle is often pictured, rolling up a slope of quality improvement, touching it in the order of P-D-C-A, with quality assurance preventing it from rolling back down.

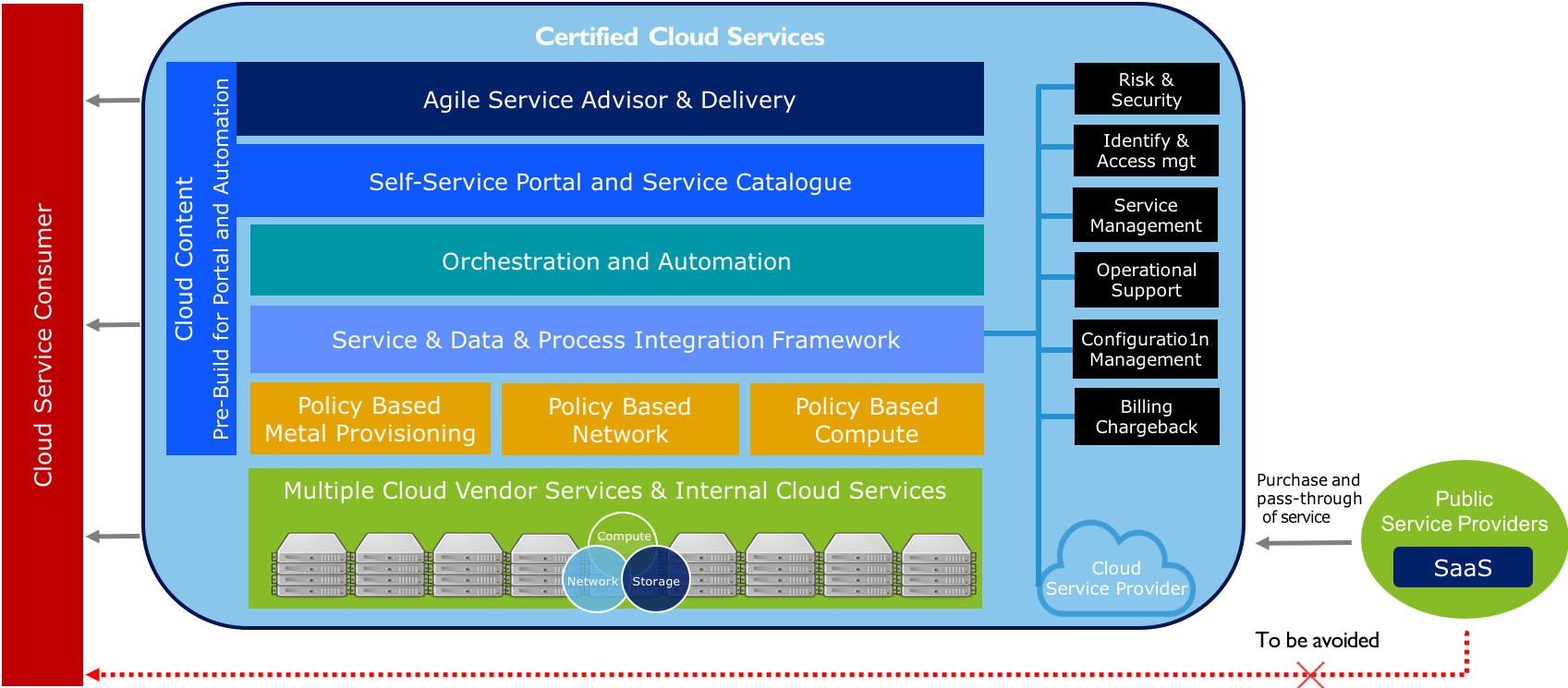

The framework above organizes governance into six areas of focus, which span the entire organization. We describe these areas of focus as Perspectives. Perspectives each encompass distinct responsibilities owned or managed by functionally related stakeholders. There are three perspectives addressing Business Stakeholders and three perspectives addressing Technology Stakeholders Each of the six Perspectives that make up the Cloud Framework as is described below. By clicking on the area’s in the image you can find more detailed information.

Helps stakeholders understand how to update staff skills and organizational processes involved in business support capabilities, to optimize business value with new services adoption.

Common Roles: Human Resources; Staffing; People Managers.

The Business Perspective is focused on ensuring that IT is aligned with business needs and that IT investments can be traced to demonstrable business results. Engage stakeholders within the Business Perspective to create a strong business case for example cloud adoption, prioritize initiatives, and ensure that there is a strong alignment between your organisation business strategies and goals and IT strategies and goals

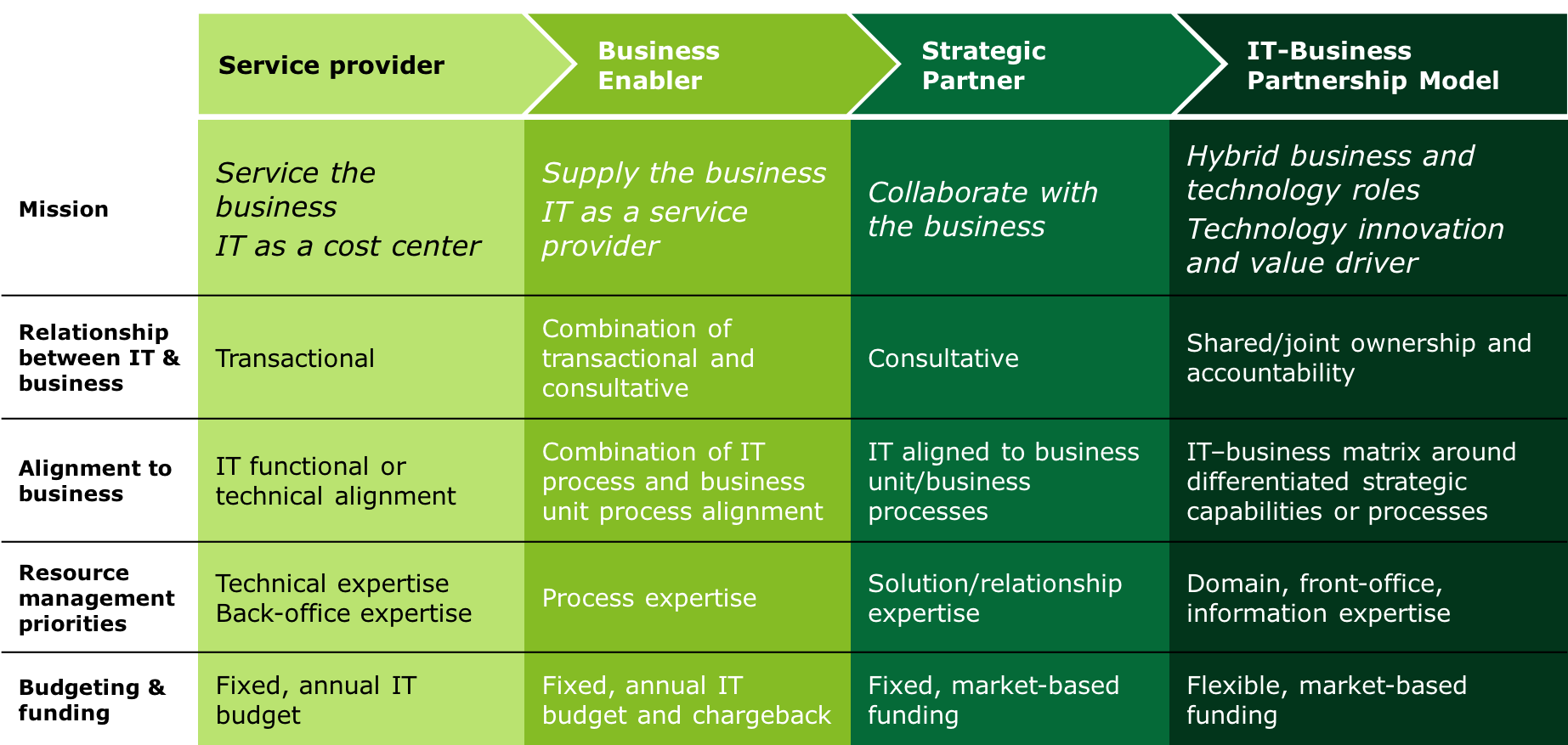

Path to Partnership

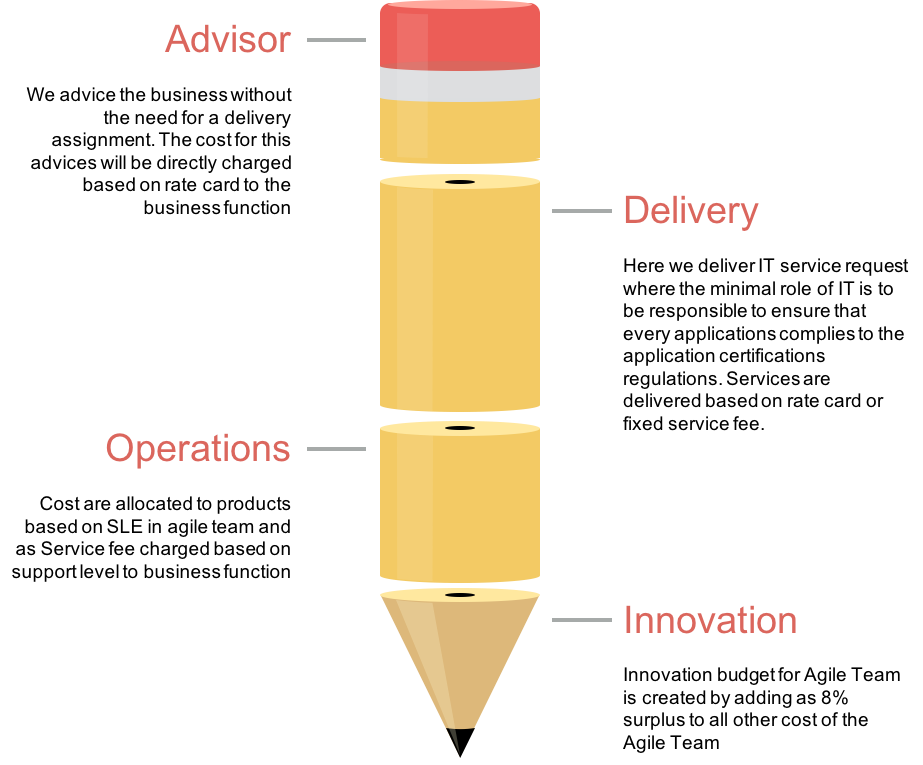

You should look critical towards your IT organisation and evaluate what kind of role you would like to play. The image below explains the path to partnership, which most IT organisation strife for.

Addresses the organisation capability to plan, allocate, and manage the budget for IT expenses given changes introduced with a services consumption model. A common budgeting change involves moving from capital asset expenditures and maintenance to consumption-based pricing. The move requires new skills to capture information and new processes to allocate cloud asset costs that accommodate consumption-based pricing models. You want to ensure that your organization maximizes the value of its cloud investments. Charge-back models are another common change with cloud adoption. Cloud services provide options to create very granular charge-back models. You will be able to track consumption with new details, which creates new opportunities to associate costs with results.

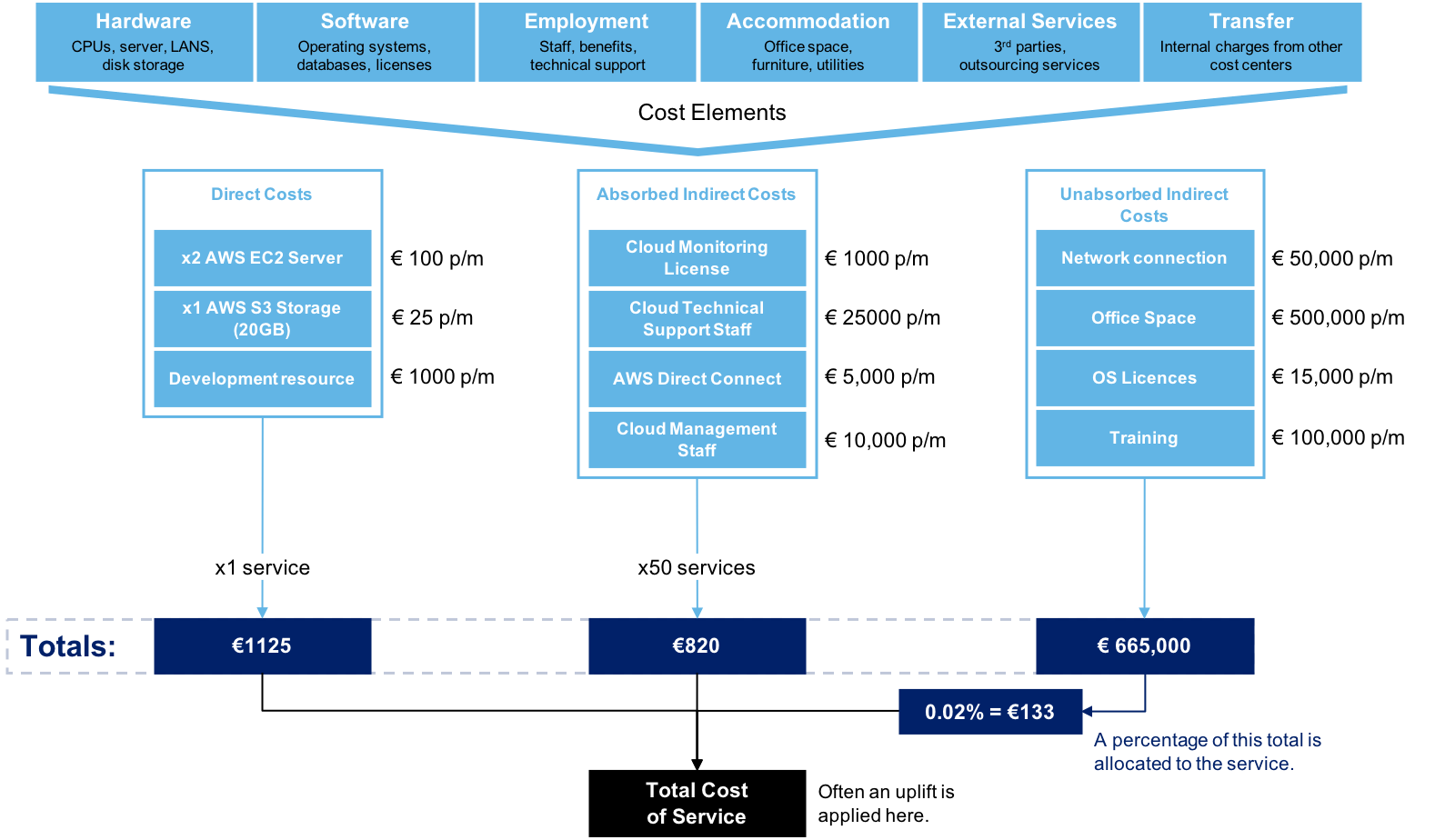

Cost for Services

It can be difficult to calculate the cost of a service. In the picture shows a model to help you make this calculation.

- Direct costs are clearly attributable to a single service

- Absorbed Indirect costs are those incurred on behalf of all or a number of services. These have to be apportioned to all or the number of services in a fair manner.

- Un-absorbed Indirect costs cannot be apportioned to a set of services and have to be recovered from all services in as fair away as is possible

Cost Elements for Agile Teams

When using cloud you probably will introduce agile teams. The advantage of Agile teams is that there cost are predictable.

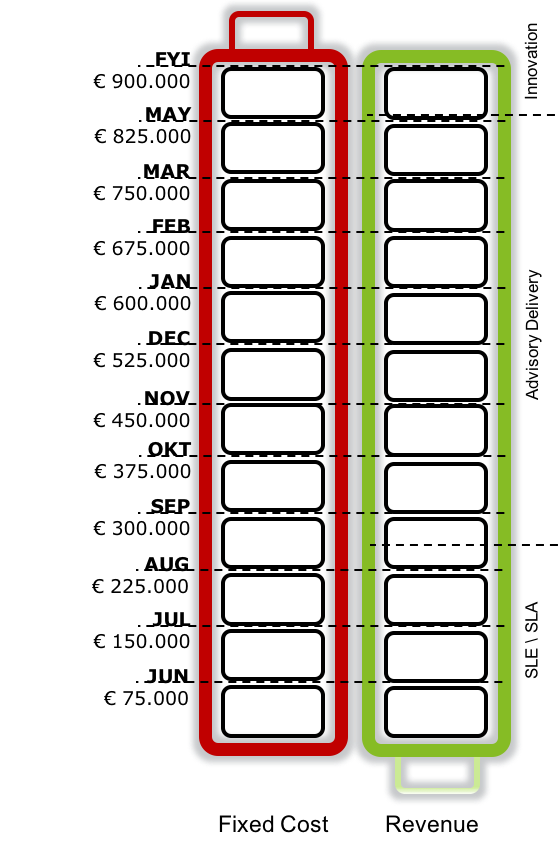

Cost Control for Agile Teams

As stated the one of the advantages of Agile Teams is cost control, the burn rate is stable so if you get a stable income the finacial out come is predicatable. Let’s take a look at an example using a battery cost model.

An agile team consist of 6 team members. The members have all the same rate (€70 incl. 8% innovation + overhead) as a result the average month cost are €75.000. The total year cost will be €900.000 and therefore the team can spend €72.000 on innovation (4 weeks).

If we take the assumption that the agile team in average will spend 32% of there time on operations in form of SLE/SLA (€288.000) their target is to spend 60% on Advisory and Delivery (€540.000)

Using a battery model we can evaluate if the agile team will break even at the end of FYI.

Compute resources are always charged directly to the functions and therefor don’t need to be part of the battery model

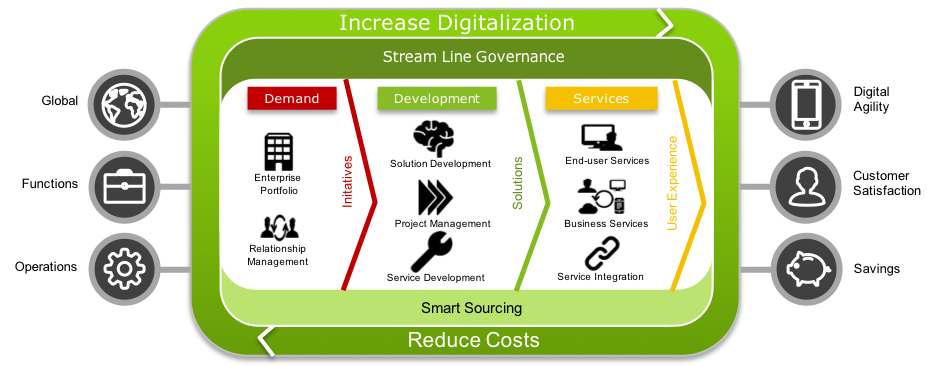

IT services provide efficiencies that reduce the need to maintain applications, enabling IT to focus on business alignment.

This alignment requires new skills and both new and selectively modified processes between IT and other business and operational areas. IT may need new skills to gather business requirements and new processes to solve business challenges. The business has increasing requirements on IT, to be faster and flexible when delivering solutions which needs to be 100% available and supported 7x24x365. There is a need for utility based cost model with full transparency and cost insight up-front. IT should be able to support them with Technology advice and implementation support. Predefined “checked-boxed” self-service approach is expected for standard services like: Security, Data & Service Integration, Technology Preference, Certification demands and Operations as a Service.

This alignment requires new skills and both new and selectively modified processes between IT and other business and operational areas. IT may need new skills to gather business requirements and new processes to solve business challenges. The business has increasing requirements on IT, to be faster and flexible when delivering solutions which needs to be 100% available and supported 7x24x365. There is a need for utility based cost model with full transparency and cost insight up-front. IT should be able to support them with Technology advice and implementation support. Predefined “checked-boxed” self-service approach is expected for standard services like: Security, Data & Service Integration, Technology Preference, Certification demands and Operations as a Service.

The strategy is to deliver a clear and concise agile process for IT services supported by Multi Vendor Cloud Technology. The experience of consuming cloud services for the business will be identical / seamless through the usage of a predefined check-boxed Cloud Portal. By working together as one agile team we will deliver business value by shortening development time, increasing productivity and increasing capacity. Determined for 100% availability, reliability and secured by design increasing our effectiveness in delivering cloud services to our partners and practitioners.

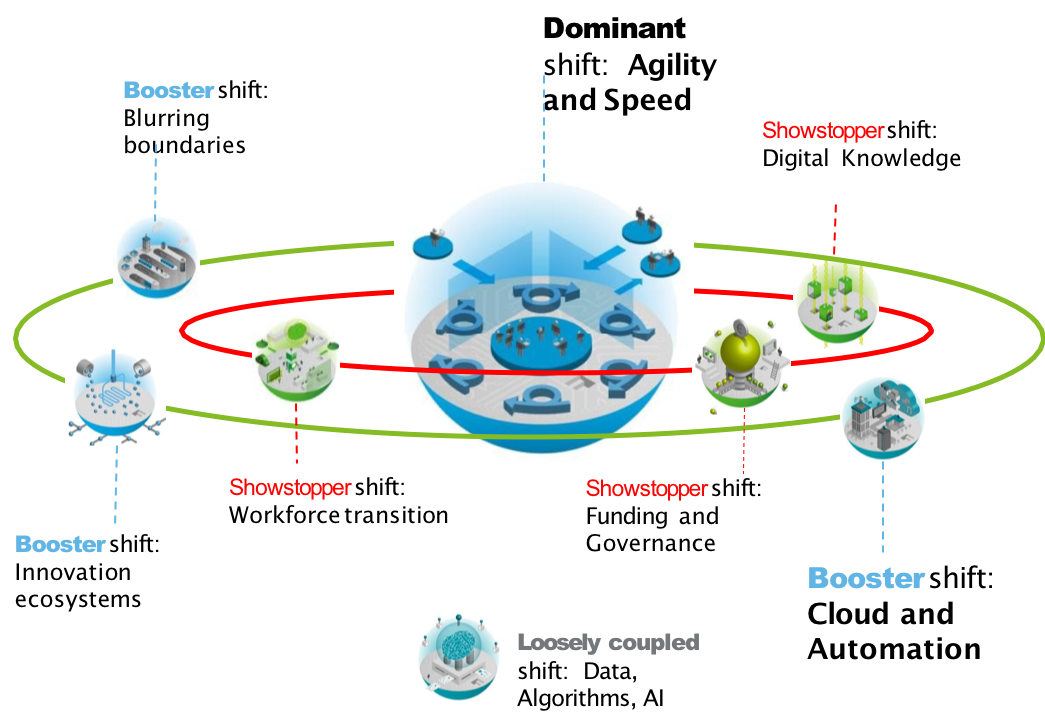

Agility and Speed

A major shift within IT is the demand for Agility and Speed which is stimulated with cloud services becoming general available by credit card.

Strategy Contributions

- Increase Agility and Speed by setting up a Agile team

- Support the shift towards Cloud and Automation

Pre-requisites to make IT successful

- Accelerate Workforce transition by adding jobs with new skills

- Increase Digital knowledge / DNA by providing training and adjusting the organisational IT culture and environment

- Adjust funding and governance by transforming to digital leadership/governance, culture of innovation and funding model adjustments

IT as Cloud Provider and Broker

Cloud Consumer

The cloud consumer is the ultimate stakeholder that the cloud computing service is created to support. A cloud consumer represents a person or organization that maintains a business relationship with, and uses the service from, a cloud provider. A cloud consumer browses the service catalog from a cloud provider, requests the appropriate service, sets up service contracts with the cloud provider, and uses the service. The cloud consumer may be billed for the service provisioned, and needs to arrange payments accordingly.

Cloud Provider

A cloud provider can be a person, an organization, or an entity responsible for making a service available to cloud consumers. A cloud provider builds the requested software/platform/ infrastructure services, manages the technical infrastructure required for providing the services, provisions the services at agreed-upon service levels, and protects the security and privacy of the services. Cloud providers undertake different tasks for the provisioning of the various service models

Cloud Broker

As cloud computing evolves, the integration of cloud services can be too complex for cloud consumers to manage. A cloud consumer may request cloud services from a cloud broker, instead of contacting a cloud provider directly. A cloud broker is an entity that manages the use, performance, and delivery of cloud services and negotiates relationships between cloud providers and cloud consumers.

In general, a cloud broker can provide services in three categories:

- Service Intermediation: A cloud broker enhances a given service by improving some specific capability and providing value-added services to cloud consumers. The improvement can be managing access to cloud services, identity management, performance reporting, enhanced security, etc.

- Service Aggregation: A cloud broker combines and integrates multiple services into one or more new services. The broker provides data integration and ensures the secure data movement between the cloud consumer and multiple cloud providers.

- Service Arbitrage: Service arbitrage is similar to service aggregation except that the services being aggregated are not fixed. Service arbitrage means a broker has the flexibility to choose services from multiple agencies. The cloud broker, for example, can use a credit-scoring service to measure and select an agency with the best score

Encompasses your organisation capability to measure the benefits received from their IT investments. For many organizations, this represents Total Cost of Ownership (TCO) or Return on Investment (ROI) calculations coupled with budget management.

Focuses on the organization capability to understand the business impact of preventable, strategic, and external risks to the organisation. For many, these risks stem from the impact of financial and technology constraints on agility. Organizations find that with a move to the cloud, many of these constraints are reduced or eliminated. Taking full advantage of this newfound agility requires teams to develop new skills to understand the competitive marketplace and potential disrupter, and to explore new processes for evaluating the business risks of such competitors.

Information security has long been built on the assumption that the internal network within a company is a safe area and has to be protected against threats from outside. In the digitalized world where everything is interconnected, the traditional company boundaries are more complex and therefore, information security has to be redefined accordingly.

Information security in the modern business world can be defined in the following three categories:

- Devices should contain as little confidential data as possible because they are prone to be lost, stolen or misused. All terminal devices should be protected by an intrusion prevention software, and all data in the devices should be encrypted. These actions can be thought as “vaccinations” against various threats i.e., not giving 100% protection but a good enough precaution that can stop the viruses spreading even further.

- Networks where the devices are used vary from completely open to closed networks. Most companies’ internal networks may still be insecure even when protected. Therefore, the traffic in the networks must be controlled and analysed to detect the anomalies as early as possible so that the possible damages can be stopped or minimized. Network protection needs both preventive and recovery actions coupled with the ability to react fast and professionally against any security hazard.

- Information storages containing the company information should be protected according to criticality of the information. All information should be classified, yet aiming for simplicity. For example, classifying the information as highly confidential, company confidential or public. This way the protection can be defined separately for each class, and the highest, and usually the most expensive protection mechanisms can be applied where it is truly needed while keeping the protection to fit-for-purpose level in the other levels.

On top of technical information security, a well-designed Identity and Access Management (IAM) is needed in order to prevent misuse of a (legitimate) identity that can lead to:

- grant access to confidential information to unwanted parties

- copy and use of the information for unauthorized/illicit purposes

- destroy the data or cause other harm to it

- modify the data to suit for own purposes

One of the most important security measures is to minimize the possibility of human errors that can pave the way for security violations. The following actions should be considered for prevention:

- Instructing and tutoring the users to prevent exposure for security threats caused by careless use. All users should be instructed to use safe passwords and safe storing of passwords as well as what to do if the terminal device is lost or they suspect a security violation to take place. These action are aimed to prevent the identity thefts.

- Appropriate definition of user rights to prevent unauthorized access or possibility to perform actions that exceed the access rights. For example, an employee should not have the rights to both create and approve the same chargeable invoice. These measures are aimed to prevent the misuse of an identity.

Information security is conducted in co-operation between the following three parties:

- Chief Information Security Officer (CISO) is responsible for planning and execution of the information security

- Business Management is responsible for ensuring the business continuity and approving of the acceptable risk levels

- Service Providers are responsible for information security operations

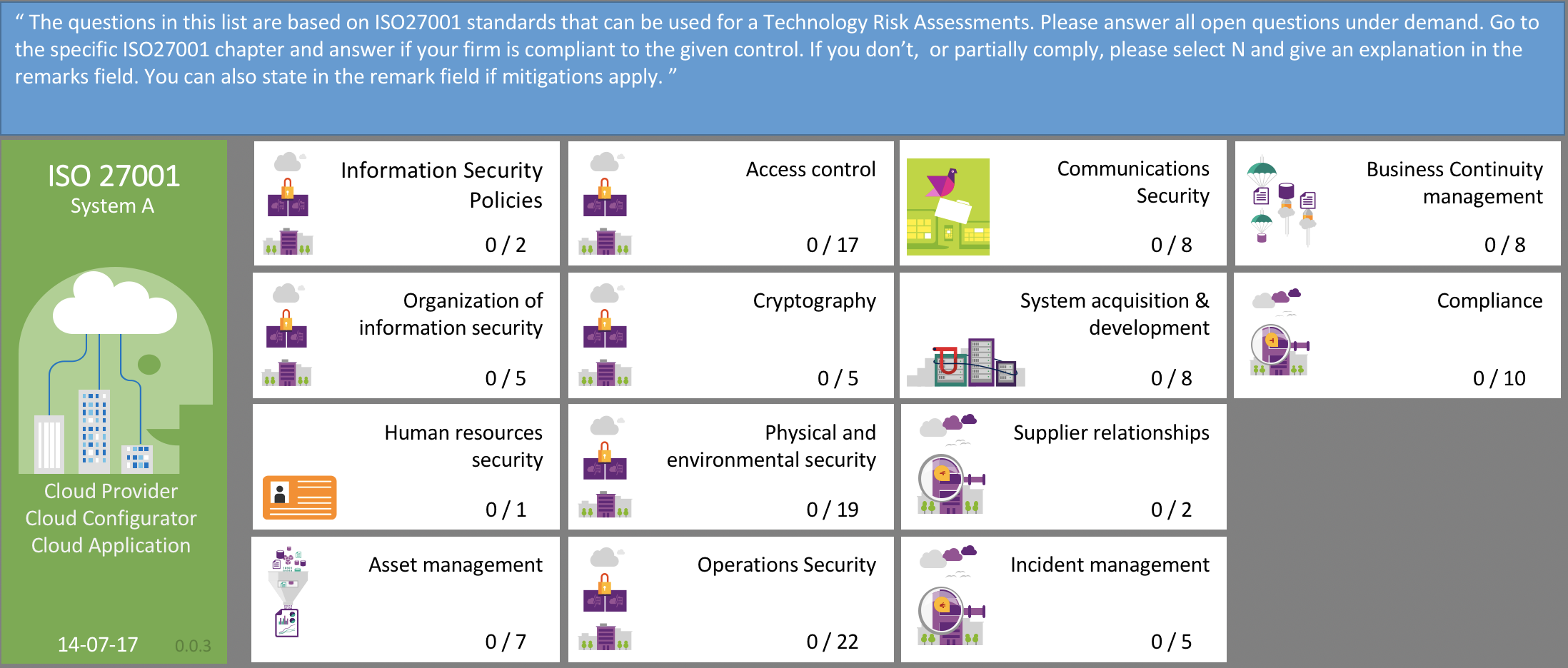

IT-related risk management must also be an integrated part of the companies overall management systems. Risk management means systematically recognizing and preparing for factors that cause uncertainty and threats to company objectives and operations. Since risks can never be entirely eliminated, management must define the companies acceptable risk level. Company management defines risk management policies, applicable methods, responsibilities and tasks for different parties as well as practices for monitoring and reporting. Business targets and uncertainty factors change over time, so risk management must also be treated as a continuous process. Below is the image of an excel you can use to make a ISO27001 based risk assessment, just click on it to open it.

Quality assurance keeps IT operations in line with standards and best practices, and ensures that the quality requirements of IT are met. IT processes must be described and they must aim to produce the best user experience. Quality assurance needs to be integrated into all IT processes and services. Quality assurance is not only about the systematic measurement of operations, processes, and services, but also their continuous development and overall business performance assurance. Additionally, it means maintaining constant focus on the business value created by IT.

Provides guidance for stakeholders responsible for talent development, training, and communications. Helps stakeholders understand how to update staff skills and organisational processes with cloud based competencies.

Common Roles: Business Managers; Finance Managers; Budget Owners; Strategy Stakeholders.

The Talent perspective covers organisational staff capability and change management functions required for efficient cloud adoption. Engage stakeholders within the People Perspective to evaluate organizational structures and roles, new skill and process requirements, and identify gaps. Performing an analysis of needs and gaps helps you to prioritize training, staffing, and organizational changes so that you can build an agile organization that is ready for effective cloud adoption. It also helps leadership communicate changes to the organization. The People Perspective supports development of an organization-wide change management strategy for successful cloud adoption

Addresses the organisation capability to project personnel needs and to attract and hire the talent necessary to support the organisations goals. Service adoption requires that the staffing teams in your organization acquire new skills and processes to ensure that they can forecast and staff based on your organizations needs. These teams need to develop the skills necessary to understand cloud technologies, and they may need to update processes for forecasting future staffing requirements.

When building you teams have a look at the role descriptions we made.

Addresses the organization capability to ensure employees have the knowledge and skills necessary to perform their roles and comply with organisational policies and requirements. Staff in your organization will need to frequently update the knowledge and skills required to implement and maintain cloud services. Training modalities may need to be revised so that the organization can embrace the speed of change and innovation. Trainers will need to develop new skills in training modalities and new processes for dealing with rapid change.

Focuses on the organisation capability to manage the effects and impacts of business, structural, and cultural change introduced with cloud adoption. Change management is central to successful cloud adoption. Clear communications, as always, are critical to ease change and reduce uncertainty that may be present for staff when introducing new ways of working. As a natural part of cloud adoption, teams will need to develop skills and processes to manage ongoing change.

Addresses our capability to ensure workers receive competitive compensation and benefits for what they bring,

Focuses on our capability to ensure the personal fulfilment of employees, their career opportunities and their financial security.

Provides guidance to stakeholders who support business processes with technology, and who are responsible for managing and measuring the resulting business outcomes. Helps stakeholders understand how to update staff skills and organizational processes necessary to ensure business governance in the cloud.

Common Roles: CIO; Program Managers; Project Managers; Enterprise Architects; Business Analysts; Portfolio Managers.

The Governance Perspective focuses on the skills and processes that are needed to align IT strategy and goals with your organisation business strategy and goals, to ensure your organization maximizes the business value of its IT investment and minimizes the business risks. This Perspective includes Program Management and Project Management capabilities that support governance processes for service adoption and ongoing operations.

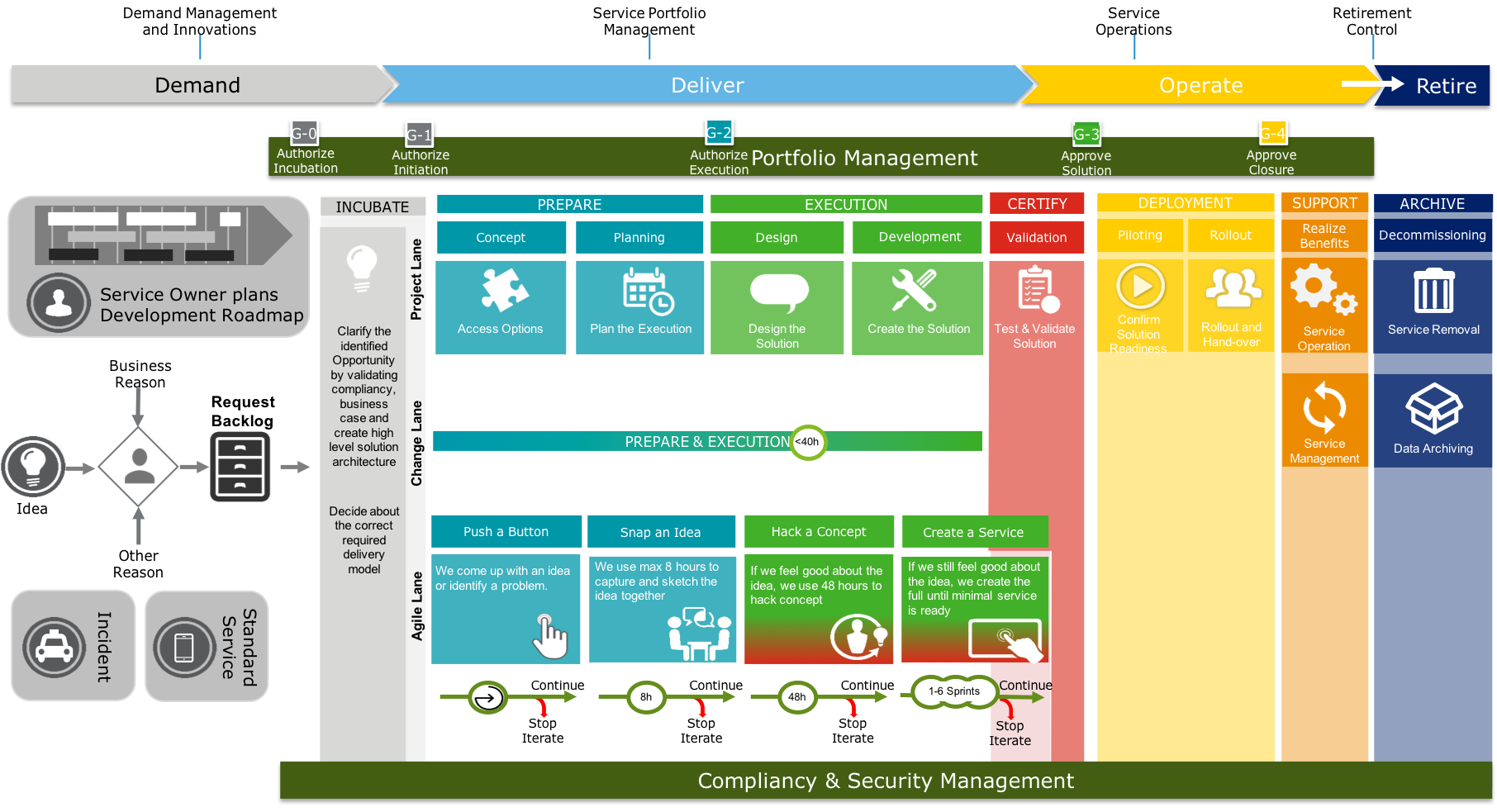

Service Life Cycle

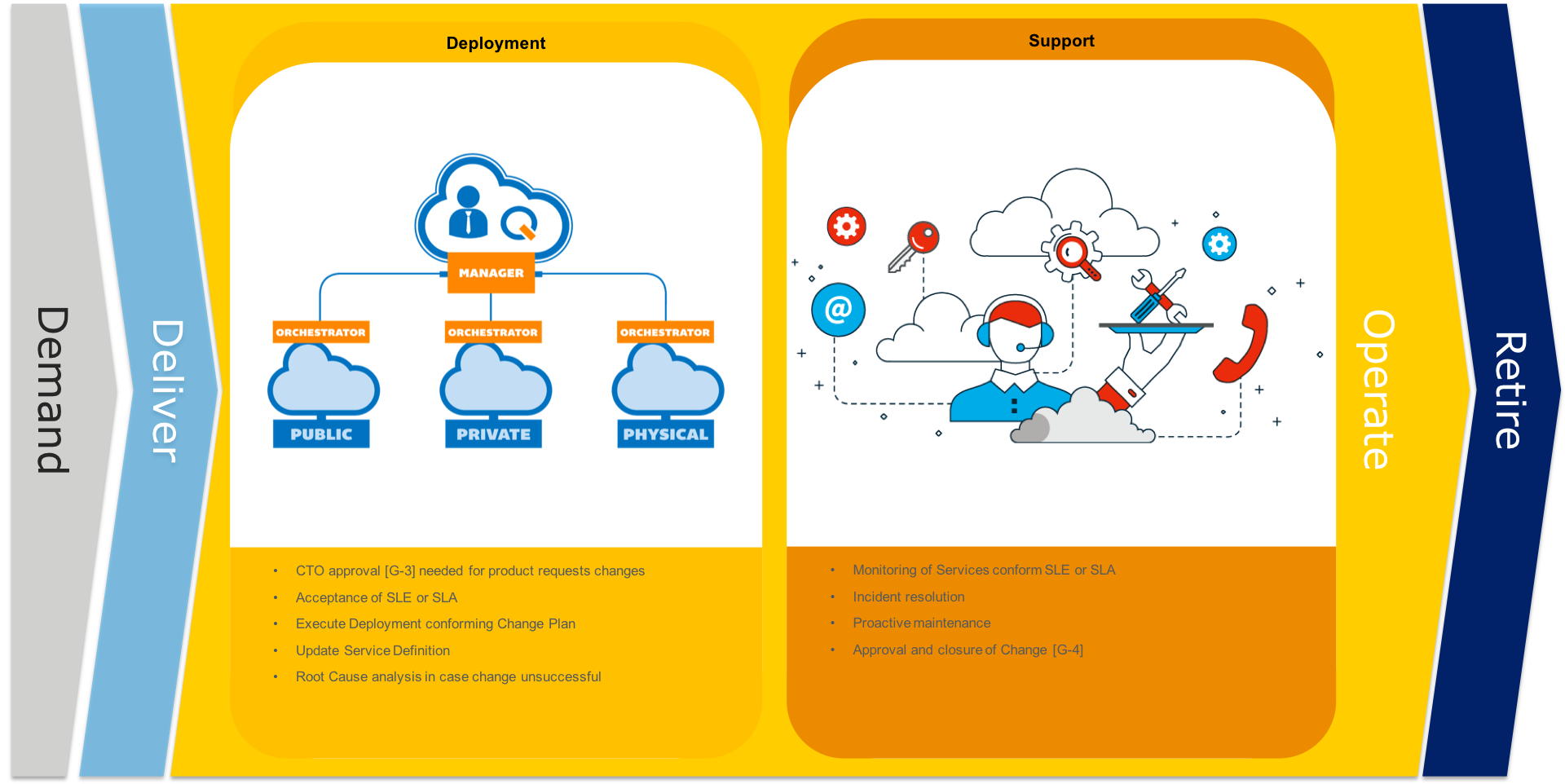

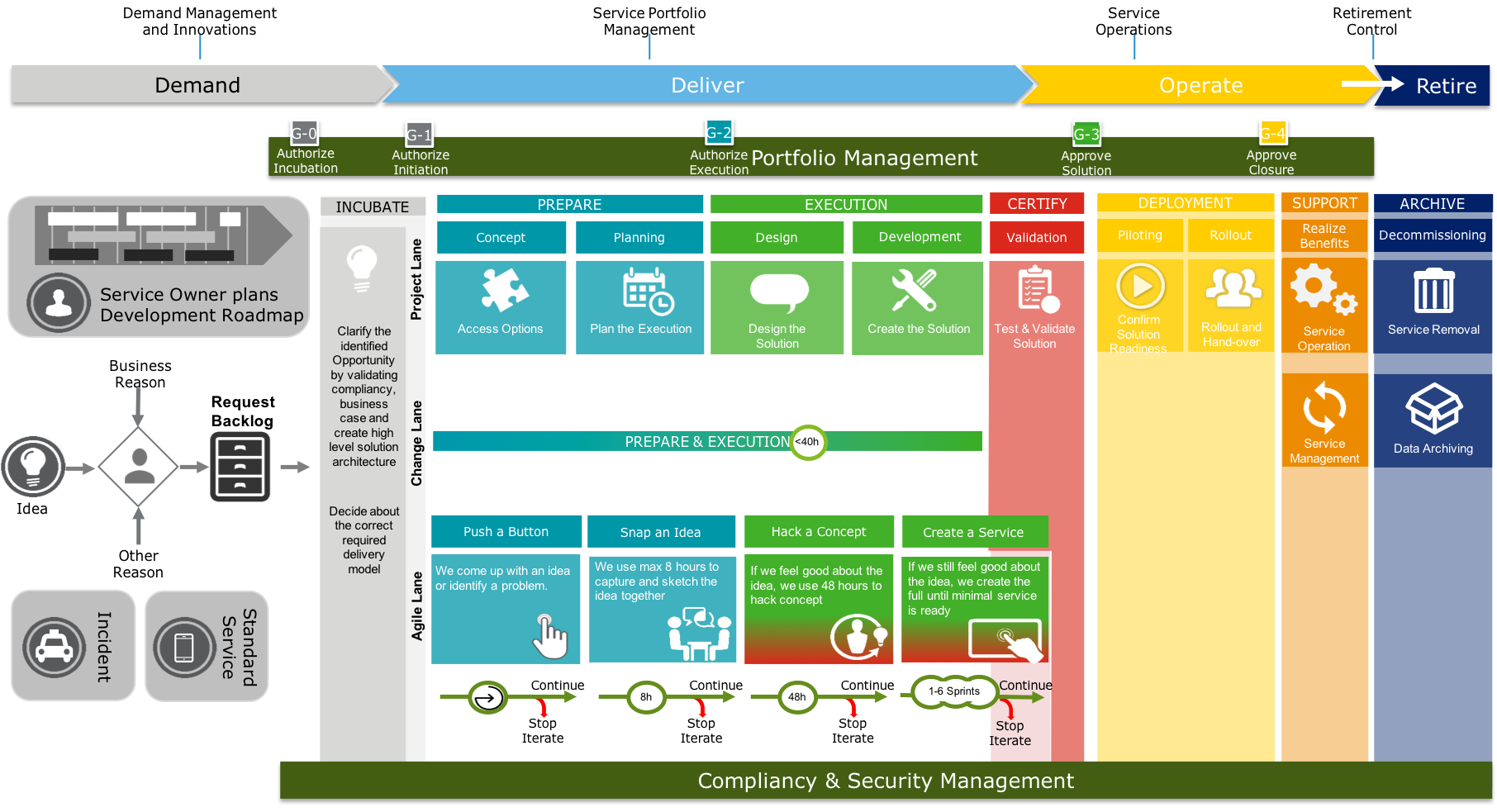

The overall service life cycle can be divided into 4 clear steps;

- Demand: ensure that the service requests of the business have an owner, are clearly defined, prioritized and approved

- Deliver: creates the services defined by the business, according to delivery method

- Operate: ensures that the services are operated within the agreed service level

- Retire: ensures the correct disposal of the services which are no longer required.

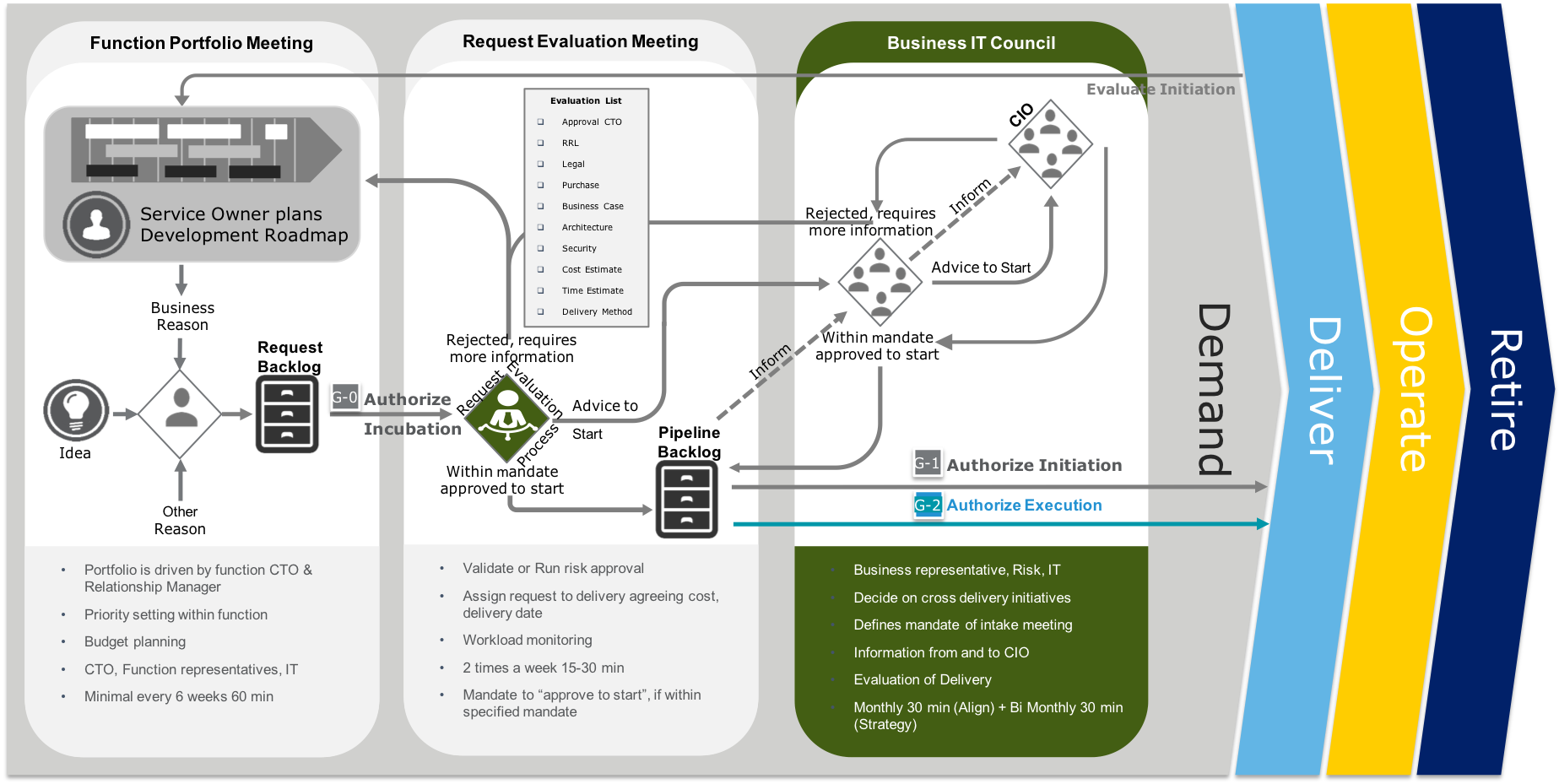

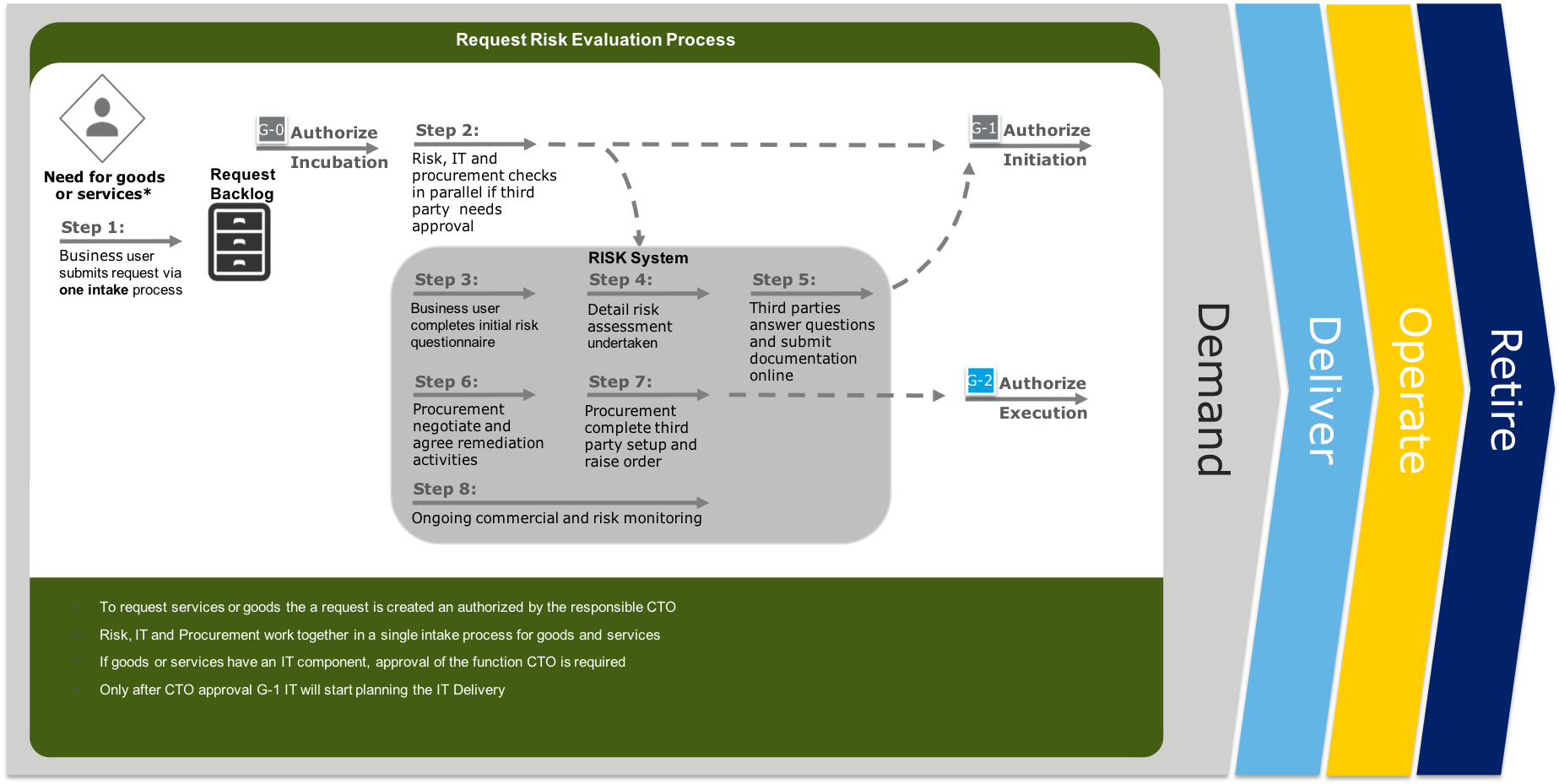

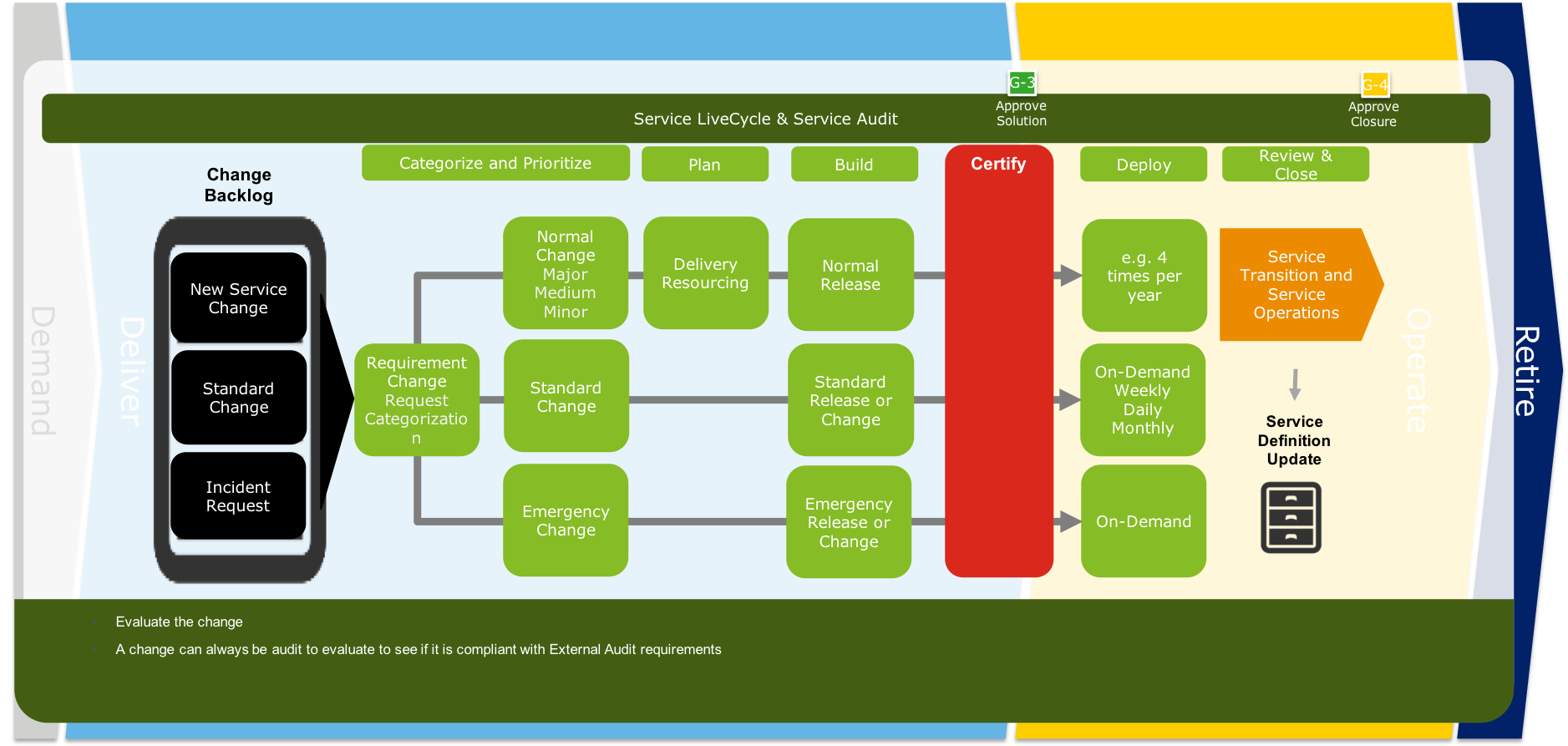

The Service Life Cycle start with the creation of a Service Plan by the Business Owner. When the business demands on the service are clear, a product request can be created. This request is validate and approved (G-0) by the CTO responsible for the business function. During the incubation phase a high level solution direction and cost estimation is made. This information is added to the product request. This request is again validated and approved (G-1) by the responsible CTO. After this approval the delivery is planned according to the selected delivery lane. When the requested service needs goods or external services then CTO is approval (G-2) is required before building of the services can start. During the certification phase, the mandatory tests are evaluate and only when successful or after exception sign-off of the CTO (G-3) the service will be deployed. After the last deployment for this service, the product request will be closed (G-4)

Service Demand

For every function group there will be regular portfolio meeting, where business and the alignment manager meet together defining which services are required by the business. For each service the CTO decides if IT should invert time creating a high level solution direction. During this incubation phase also all external, risk and compliancy task will be performed. When services has impact on more then one function group the proposal needs to evaluated by the Business IT council. If the cost of the services is above the mandate of the Business IT council it will be forwarded to the CIO for approval. After the product request has been updated with the required approval the CTO has to give his final approval.

When goods or external services are required an additional Risk and compliancy check needs to be done. Any purchasing activity needs to be completed (step 7) before (G-2)

Service Delivery

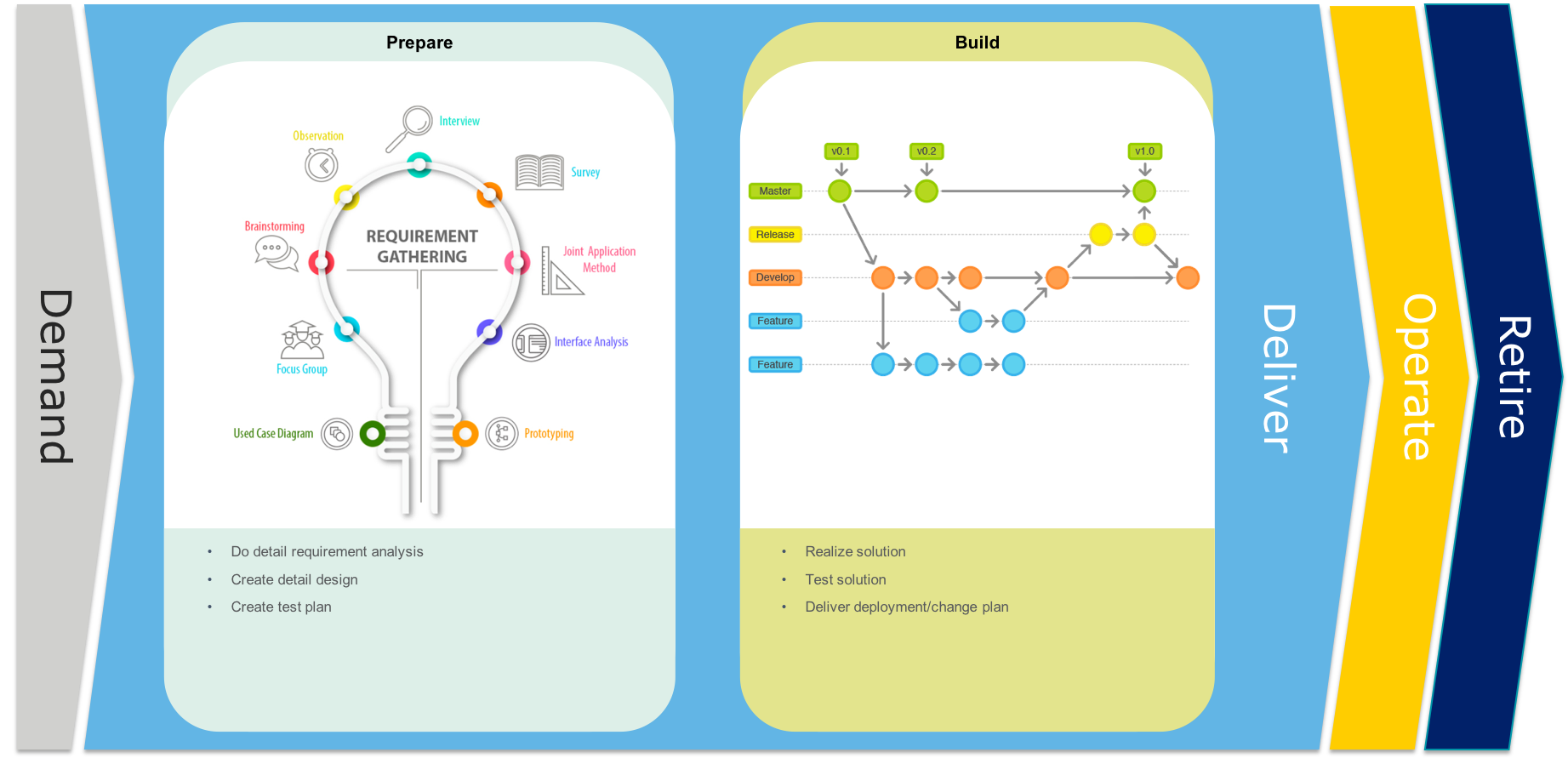

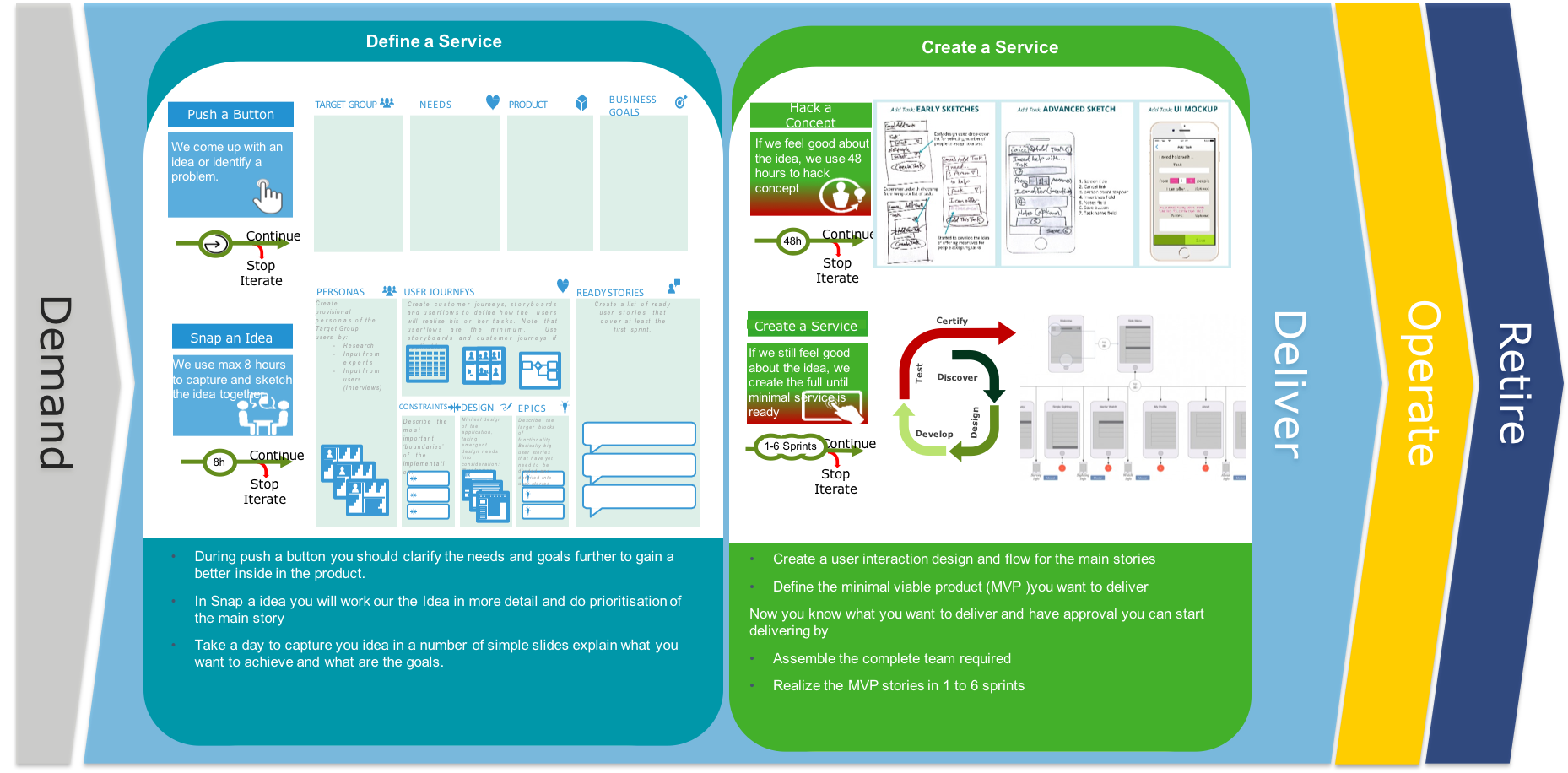

When for a services an traditional delivery model is chosen then during the preparation phase we will focus on requirements gathering and detail design. During the Build phase we will build one or more features and combine them later into on release.

User Stories: In consultation with the customer or product owner, the team divides up the work to be done into functional increments called “user stories.” Each user story is expected to yield a contribution to the value of the overall product.

Daily Meeting: Each day at the same time, the team meets so as to bring everyone up to date on the information that is vital for coordination: each team members briefly describes any “completed” contributions and any obstacles that stand in their way.

Incremental Development: Nearly all Agile teams favor an incremental development strategy; in an Agile context, this means that each successive version of the product is usable, and each builds upon the previous version by adding user-visible functionality.

Iterative Development: Agile projects are iterative insofar as they intentionally allow for “repeating” software development activities, and for potentially “revisiting” the same work products.

Team: A “team” in the Agile sense is a small group of people, assigned to the same project or effort, nearly all of them on a full-time basis. A small minority of team members may be part-time contributors, or may have competing responsibilities.

Milestone Retrospective: Once a project has been underway for some time, or at the end of the project, all of the team’s permanent members (not just the developers) invests from one to three days in a detailed analysis of the project’s significant events.

Personas: When the project calls for it - for instance when user experience is a major factor in project outcomes - the team crafts detailed, synthetic biographies of fictitious users of the future product: these are called “personas”.

Within the change process we focus on developing standard (preferred automated) service release process. We validate if all required documentation and test are provided. In case of a failure you should perform a root cause analysis and adopt require changes to the standard release process. The task of the changes manager will become more a facilitator of the process and auditor after the changes is completed.(more..)

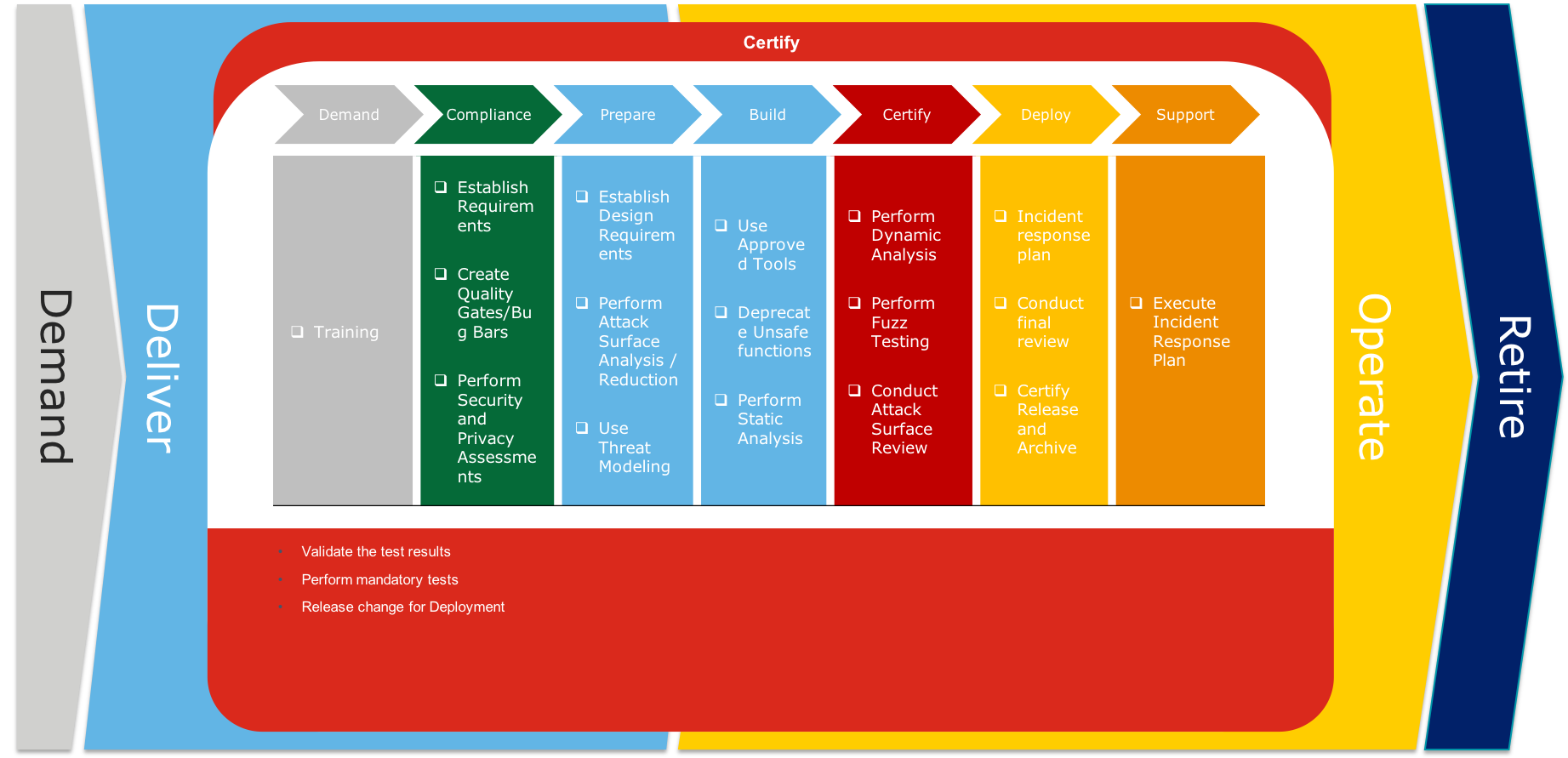

During certify we check if all required certifications steps are performed and completed successful. When not successful and it is required to continue an exception has to be provided by the CTO.

Service Operation

During the deploy phase the provides is preferable automatically deployed on the required infrastructure. The information to support the new/update services is communicated or trained to all impacted resources.

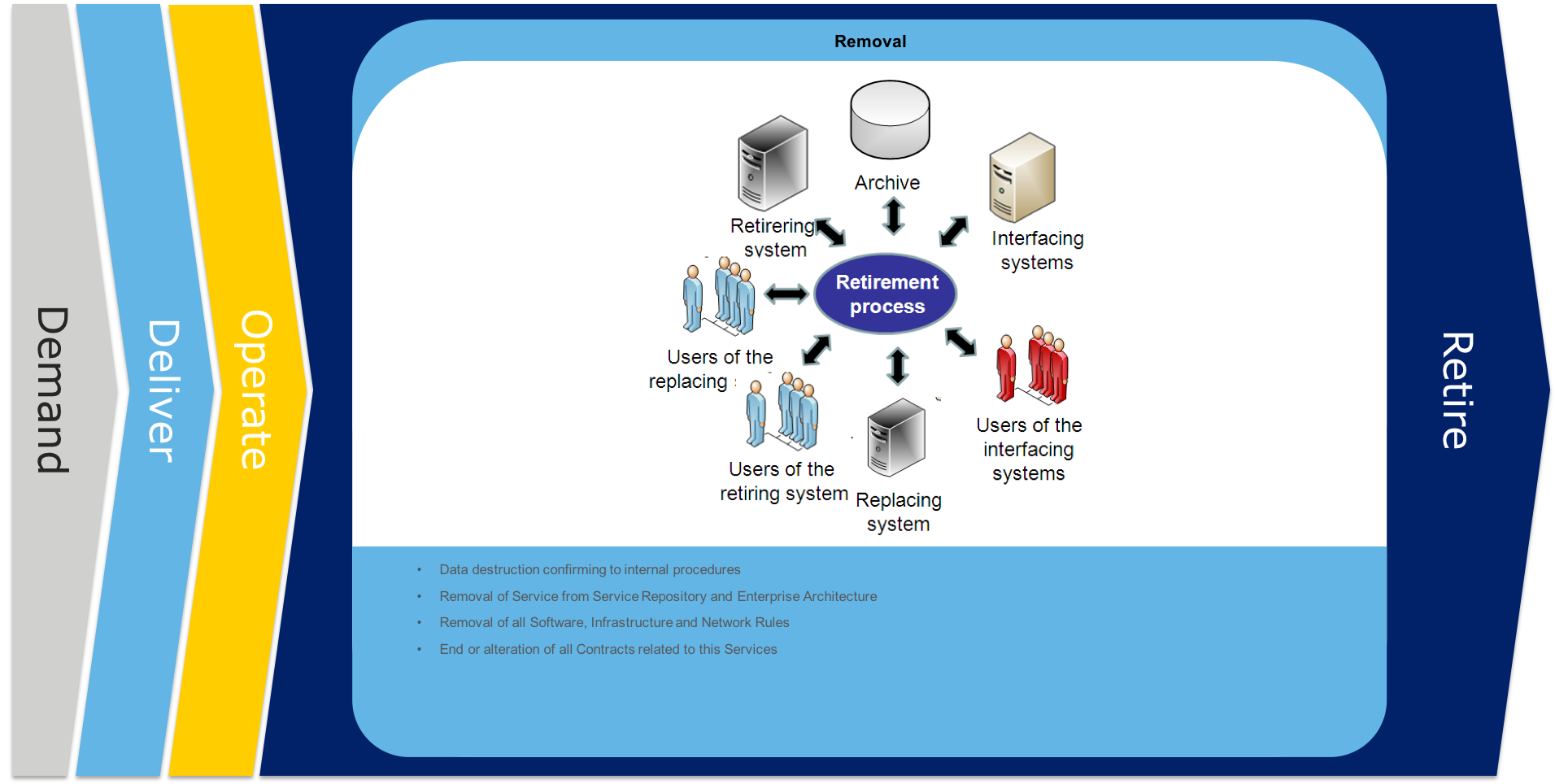

Service Retirement

When a service needs to be retired, all data should be archived or destroyed, people should be transferred to other jobs or let go. The services and assets should unregistered.

Focuses on your organisation capability to manage and prioritize IT investments, programs, and projects in alignment with your organisations business goals. Portfolio Management is an important mechanism for determining eligibility for workloads and for prioritizing services delivery. It serves as a focal point for life cycle management of both applications and services. Teams will need to develop new skills and processes to evaluate services and a workloads eligibility.

Development is coordinated by the Development Management Office (DMO), which also sets and promotes development practices for company-wide control, visibility and consistency. DMO has the mandate to classify and prioritize development initiatives to be approved or rejected by Development Portfolio Steering. DMO has control over resources, dependencies and the performance of major development initiatives, while providing the required support and consultation to maximize business benefits creation and minimize risks.

Many organizations have a Project Management Office (PMO) that handles all the same tasks as the DMO, but just for projects. As more and more development takes place beyond projects, it is recommended to have a full-scope DMO to replace the PMO.

Projects vary greatly in terms of targets, duration, budget, staffing and difficulty. Consequently, not all development initiatives require a project and they can be classified as a change. In all development, excluding a straightforward change, the following topics need to be managed:

Business Case validity Goals, scope and constraints Timetables and costs Tasks and deliverables Workloads and needed resources (internal and/or external) Compliance with Enterprise Architecture Quality and risks

Addresses the organisation capability to manage one or several related projects to improve organisational performance and complete the projects on time and on budget. Traditional waterfall methods of program and project management typically fail to keep up with the pace of iterative changes necessary for cloud adoption and operations. Program and Project Managers need to update their skills and processes to take advantage of the agility and cost management features of cloud services. Teams need to develop new skills in agile project management and new processes for managing agile-style projects

Addresses the organisation capability to measure and optimize processes in support of your organisation goals. Services offer the potential for organizations to rapidly experiment with new means of process automation and optimization. Leveraging this potential requires new skills and processes to define Key PerformanceIndicators (KPIs) and create processes to ensure consumption is mapped to business outcomes.

Defines your organisation capability to procure,distribute, and manage the licenses needed for IT systems, services, and software. The serve consumption model requires that teams develop new skills for procurement and license management and new processes for evaluating license needs.

The service delivery models that present the most software-licensing challenges are infrastructure as a service (IaaS) and platform as a service (PaaS). Software as a service (SaaS) is less likely to cause problems because, as the name suggests, the software is part of the cloud provider’s services. With IaaS and PaaS, though, the customer has shared control over what is run in the cloud environment, including third-party software. In the case of IaaS, the customer does not manage or control the underlying cloud infrastructure but may have control over operating systems and deployed applications. With PaaS, while the customer typically doesn’t have control over the operating system, it may have control over the deployed applications.

Where the complexity comes in is that software manufacturers are all over the map in how they address cloud use in their software licenses. Some base their licensing on the number of users, and those users in turn may be named or concurrent. Others charge per processor or core that the software runs on. Still others look at actual usage, a metric that is distinct from number of users. The one thing that these various licensing models have in common is that they are attempts to maximize revenue, and naturally, software makers view the use of their products in the cloud as an expansion of licensing rights that represents an opportunity for increased revenue.

Can the customer argue that the cloud does not represent an expansion of licensing rights? It would be difficult. If the customer acquired its software licenses from the vendor under a long-standing agreement, chances are good that the agreement pre-dates the inception of cloud computing. Of course, contracts generally do not address technology offerings that don’t exist at the time of the contract’s drafting, so a pre-cloud software-licensing contract is highly unlikely to contemplate the use of those licenses in a cloud environment. Legally, any rights that aren’t explicitly stated as being granted to the customer in the license agreement are retained by the software manufacturer. In cases like this, customers do not have any pre-existing rights to use their software licenses in the cloud.

Parsing the clauses

To better understand the challenges that the cloud brings to software licensing, it might be helpful to take a look at some clauses that one might see in a cloud vendor’s contract. Below are four, followed by my explanation of what they mean and why they’re pertinent.

Customer authorizes [VENDOR] to copy, install and modify, when necessary and as required by this Agreement, all third-party software to be used in the Services.

What this means: As part of providing the service, the cloud vendor may need to access the software in order to create redundant systems, and potentially to replicate or restore the customer environment in the event of an unplanned outage or other disaster. The above language says that the customer gives the cloud vendor permission to do these things on its behalf.

Customer warrants to [VENDOR] that it has obtained any licenses or approvals required to give [VENDOR] such rights or licenses to access, copy, distribute, use and/or modify or install any third-party software to be used in the Services.

What this means: This affirms that the customer’s license agreement with the software manufacturer includes the rights for the cloud vendor to access the software in the manner described above.

Some third-party software manufacturers’ contract terms and conditions may become void if [VENDOR] provides services for or works on the software (such as providing maintenance services). [VENDOR] DOES NOT TAKE RESPONSIBILITY FOR THIRD-PARTY WARRANTIES OR FOR ANY EFFECT THAT THE [VENDOR’S] SERVICES MAY HAVE ON THOSE WARRANTIES.

What this means: The cloud vendor is saying that if its use of the software in providing the services causes any noncompliance with the terms of the software-license agreement, then the cloud vendor is not responsible for any adverse consequences.

Third-party software shall be exclusively subject to the terms and conditions between the third-party software provider and Customer. [VENDOR] shall have no liability for third-party software.

What this means: The cloud vendor is saying that it has no responsibility regarding the effective functioning of the software, or any adverse impacts of any malfunctioning of the software.

All this adds up to the fact that you need to clearly identify your license rights and usage needs before deploying third-party software in the cloud, then effectively capture those in your contract with the cloud vendor.

Helps stakeholders understand how to update staff skills and organizational processes necessary to deliver, maintain, and optimize cloud solutions and services.

Common Roles: CTO; IT Managers; Solution Architects.

IT architects and designers use a variety of architectural dimensions and models to understand and communicate the nature of IT systems and their relationships. Organizations use the capabilities of the Platform Perspective to describe the structure and design of all types of cloud architectures. With information derived using this Perspective, you can describe the architecture of the target state environment in detail. The Platform Perspective includes principles and patterns for implementing new solutions on the cloud, and migrating on-premises workloads to the cloud.

Encompasses the organisation capability to provide processing and memory in support of enterprise applications. The skills and processes necessary to provision cloud services are very different from the skills and processes needed to provision physical hardware and manage data centre facilities. Many processes move from being focused on real-world logistics to being focused on virtual and fully automated processes.

Addresses the organisation capability to provide computing networks to support enterprise applications. Moving from hardware components to a networks of delivered services changes network provisioning significantly, and teams will need to develop new skills and processes to design, implement, and manage this transition.

Focuses on the organisation capability to provide storage in support of enterprise applications. Storage provisioning in the cloud is accomplished with cloud-based block and file storage. The skills and processes required to provision these services are significantly different from provisioning the physical storage area network (SAN), network-attached storage (NAS), and disk drives.

The Problem With Legacy Storage Provisioning

This need for better storage provisioning capabilities has lead the storage suppliers in the industry to add storage virtualisation capabilities to their legacy storage systems. But this virtualisation is often internal, meaning it is isolated to a single system and a single manufacturer. Internal storage virtualisation has simplified, to a degree, the storage provisioning process by allowing an administrator to simply select the size of the partition and letting the storage system do more of the work. With internal virtualisation the administrator will still receive every storage request, analyse the request and know where to provision that storage from. All of which becomes a bottleneck to service delivery.

It also leads to having multiple storage virtualisation software instances running as each system from each manufacturer has its own software that needs to be learned and interacted with. The CSP/MSP typically has a wide collection of storage hardware. This would be similar to having a different brand hypervisor loaded on every server and having to manage each of those separately.

Legacy provisioning as it is provided by internal storage virtualisation also requires that the administrator know which type of storage and storage system from which the provisioning will occur. The administrator needs to make the physical connection between the performance needs of the application and the storage environment’s available storage media types. They must know which media types and systems are best suited for each type of request.

Provisioning is More Than Capacity

The current internal storage virtualisation capabilities found in legacy systems are limited to the provisioning of capacity. Storage, like servers, has more than just one resource and applications will use those resources differently depending on the situation. Storage resources include the storage CPU, storage controller memory, internal cache management and network bandwidth in addition to physical capacity required. The combination and control of these resources represent the amount of IOPS (input/output operations per second) or throughput and storage latency that a storage system can deliver. But as is the case with storage capacity not all servers or applications need the same amount of IOPS or storage latency. Legacy storage systems simply don’t provide a granular way to allocate performance within a storage system.

The lack of the ability to provision performance plagues even more modern storage systems as well as storage virtualisation software that claims to be designed for the highly visualised data centre. Reality is that these systems may be appropriate for those situations but are not able to meet the provisioning needs of the CSP/MSP.

Provision Requirements of the CSP/MSP

The CSP/MSP is foreshadowing what the enterprise will become in the near future; a data centre that is judged on its ability to respond rapidly to an ever growing and ever demanding user base. In the case of a CSP/MSP these “users” are accounts that pay a monthly fee and have specific service level agreements (SLA) requirements of the CSP/MSP. The speed at which provisioning can be performed and the ability for that provisioning rule to be maintained over time is the foundational component in meeting those SLAs.

Self-Serviced Provisioning

For the CSP/MSP to be profitable they cannot afford to hire administrators every time a new account is brought on or even after 100 accounts are brought on. Instead they need to be able to safely delegate provisioning to the account while maintaining oversight. This means allocating a certain amount of capacity and IOPS/throughput/latency per account and then allowing the account to divide up those services based on need.

Self monitoring also may be a need in many cases. The account wants to know how much he is using on which application at what time. This will help them to better manage their applications running at a CSP/MSP.

Manages User’s Expectations

A key challenge with not being able to provision IOPS in legacy systems is that the performance experience can not be controlled. This creates an expectations problem because users that sign up for a bronze service level get the same performance experience that a gold service level gets.

Even if different class systems are used to allocate the performance resources, the first set of users on a system will experience a higher than promised level of performance and then see their performance degrade as more accounts are added to a system. The CSP/MSP needs the ability to guarantee a certain level of performance, no more, no less, so that users expectations can be managed.

This level of performance needs to remain constant, so the performance that the user sees from their assigned storage is the same today as it will be a year from now. Changes to the environment and even the storage system itself should not impact the user nor jeopardize the SLA.

Efficient Provisioning

The MSP/CSP needs to balance the cost advantages of maximizing storage resources with the customer satisfaction risks associated with extending a system too far. They need a storage system that will allow them to granularly assign capacity and performance resources so that these systems can be taken to their maximum capabilities without risking customer satisfaction.

Essentially each available GB and IOPS needs to be bought and paid for prior to investing in an additional system. This allows the addition of new storage investment to be trended based on the rate that resources are being consumed on present systems. In short the storage environment needs to scale like the CSP/MSPs business scales.

When a customer or account demands storage with varying levels of performance and capacity, they may need to be provisioned from different storage systems. The administrator needs to know which storage system has how much capacity and performance left out. When manually managed by the administrator, storage fragmentation occurs, usually.

Storage fragmentation is a phenomenon in which large number of storage systems have the ability to provide a certain type of storage but none of the storage system is capable of providing one type of storage. For example, if there are 10 storage systems in the infrastructure and the CSP/MSP admin provisions 5TB/1000 IOPS volumes equally on all of them, when the system is 70% full, it may not be possible to provision 5TB/20000 IOPS, as this needs writing across large number of disks, but the disks are 70% full. Intelligent and automated provisioning guidelines will help avoid such a scenario.

Multi-Vendor, Multi-Tier Provisioning

CSP/MSP also need the storage system to provide this provisioning along with other storage services like thin provisioning, snapshots, cloning and replication, across multiple storage platforms, even those from different vendors. This allows the CSP/MSP to manage their entire storage environment from a single interface regardless of the manufacturer of the individual platform. Performance can then be allocated intelligently across platforms by finding the storage system with the storage resources that best match the IOPS requirement. It also saves the MSP/CSP from the vendor lock in associated with buying a single vendors system, giving them flexibility to select storage systems based on suitability to the task at hand.

Introducing Elastic Provisioning

Elastic provisioning is the ability to provision both capacity and performance resources data centre wide from a single interface. It models the server virtualisation concept by deploying a series of off the shelf servers to act as physical storage controllers. The storage in the environment is then assigned to these storage controllers. Since these controllers are abstracted from the physical storage they can manage a mixed storage vendor environment.

Elastic storage provides the ability to spawn virtual controllers similar to how a server host spawns virtual servers. Each of these virtual controllers is assigned to an account. Capacity and IOPS/Throughput/Latency, based on the needs of the account, are then assigned to the virtual controller. The account can then sub-divide the capacity and SLA parameters based on the needs of each of its applications.

This virtual controller functionality ensures that a misbehaving application at one account won’t impact the capacity or performance needs of another account. There is complete isolation. It also insures that data can be segregated between accounts, another common concern in the CSP/MSP.

From CSP to the Enterprise

It is easy to see how the enterprise could leverage these capabilities as well. Instead of accounts, different lines of business or application groups could be assigned virtual storage controllers. Those groups could then manage their own storage without risk to the other groups. As is the case with CSP/MSPs the enterprise also has a mix of storage systems and could benefit from a centralized controller cluster.

Summary

Provisioning of storage remains a key challenge in data centers of all types and sizes but it is especially problematic for the CSP/MSP. It becomes THE bottleneck in rapidly responding to customer requests and its limitations make it difficult to guarantee long term adherence to SLAs. Elastic provisioning is a viable solution to this problem. It provides for multi-vendor provisioning of both capacity and performance resources.

Addresses the organisation capability to provide database and database management systems in support of enterprise applications. The skills and processes supporting this capability change significantly from managing hardware-bound and cost-bound databases to provisioning standard relational database management systems (RDMS) in the cloud and leveraging cloud-native databases.

Database provisioning for development work isn’t always easy. The better that development teams meet business demands for rapid delivery and high quality, the more complex become the requirements for the work of development and testing. More databases are required for testing and development, and they need to be more rapidly kept current. Data and loading needs to match more closely what is in production.

When more than one developer is working on a development database, it is wise to ensure that developers can easily set up, or provision, their own versions of the current build of the database as a part of having an isolated development environment. By providing a separate copy of the current version of the database, it is easier to ensure that any one person working on code doesn’t break the work being done by others. This goes some way to support a DevOps approach to database development. It doesn’t remove the need for integration and integration testing, but it improves the individual developer’s ability to get work done unhindered by the overhead of team-based database development.

Provisioning any type of server environment for this type of isolated development is tricky, whether it involves web servers, active domains or email servers, but when we add databases to the provisioning story, as with just about everything with databases, the situation becomes more challenging.

Challenges of Provisioning Database

As soon as you start to automate the process of creating databases for developers you’re going to hit a number of issues. Each of these makes it more difficult to create an automated, hopefully self-service, method of provisioning a database. Ideally, you’d like the developers to manage this themselves via an automated system but there are plenty of roadblocks on the route to that Eldorado.

Size of Database

It is quick and easy to automate the simple process of creating an empty database. However, the production environment will never involve just an empty database, and the developer will have to be certain that the database will work with a volume of data with the same characteristics, distribution and size as the production data. Basically, you’re going to need that sort of data for at least part of the development process. That data is going to have to be at least representative of your production data, though you’d be unlikely to be able to use a copy of it (see more on Production Data in the section below). This means you’re moving more than a few rows. Most databases these days are at least hundreds of gigabytes in size and may run into many terabytes or more.

The size of the data presents two immediate challenges to provisioning. First, you need to have the space available to provide this for each of the developers. For relatively small databases of 20-50gb, this is no big deal nowadays, but the more production-like the volume of data gets to be, the larger the size of disk-space required. Second, as the sizes increase, it makes provisioning slower and slower in an almost linear fashion. Restoring or migrating more data simply takes more time.

Timing of Provisioning Refresh

When you have several developers working on a single development database, but working with different parts of that database in varying degrees of completion for any given piece of functionality, one developer may want to check in his work and get a fresh build of the database, while the others haven’t completed their code or even set up adequate unit testing for the new functionality yet and need to remain on the current version increment of the database. Add in a testing team or even multiple development teams and this problem multiplies. You could just hope that they are all happy with the same set of data, but what if someone needs an extreme version for scalability tests, or run integration tests on a process, using a known input set of data?

As long as the individual is responsible for their own database, they can largely ignore the current status of other databases, however, as soon as integration has to occur, multiple versions of test data, structures or code could cause severe difficulty.

Production Data

In theory, the very best data for testing aspects of the functionality to ensure that it will work when the code gets to production is the data from production. However, data is becoming more and more tightly regulated, up to and including the actual threat of prison time for intentionally sharing data with unauthorized people.

Even if there were no need for regulatory compliance, it would be crazy to allow your developers to have production data on their development laptops. These are likely to leave the building regularly and could be, and often are, lost or stolen. The information managed within these databases defines many modern businesses, so losing something like a database of customers to the competition could be crippling.